Software Engineering

Covers software design, verification, validation, documentation, and software development tools.

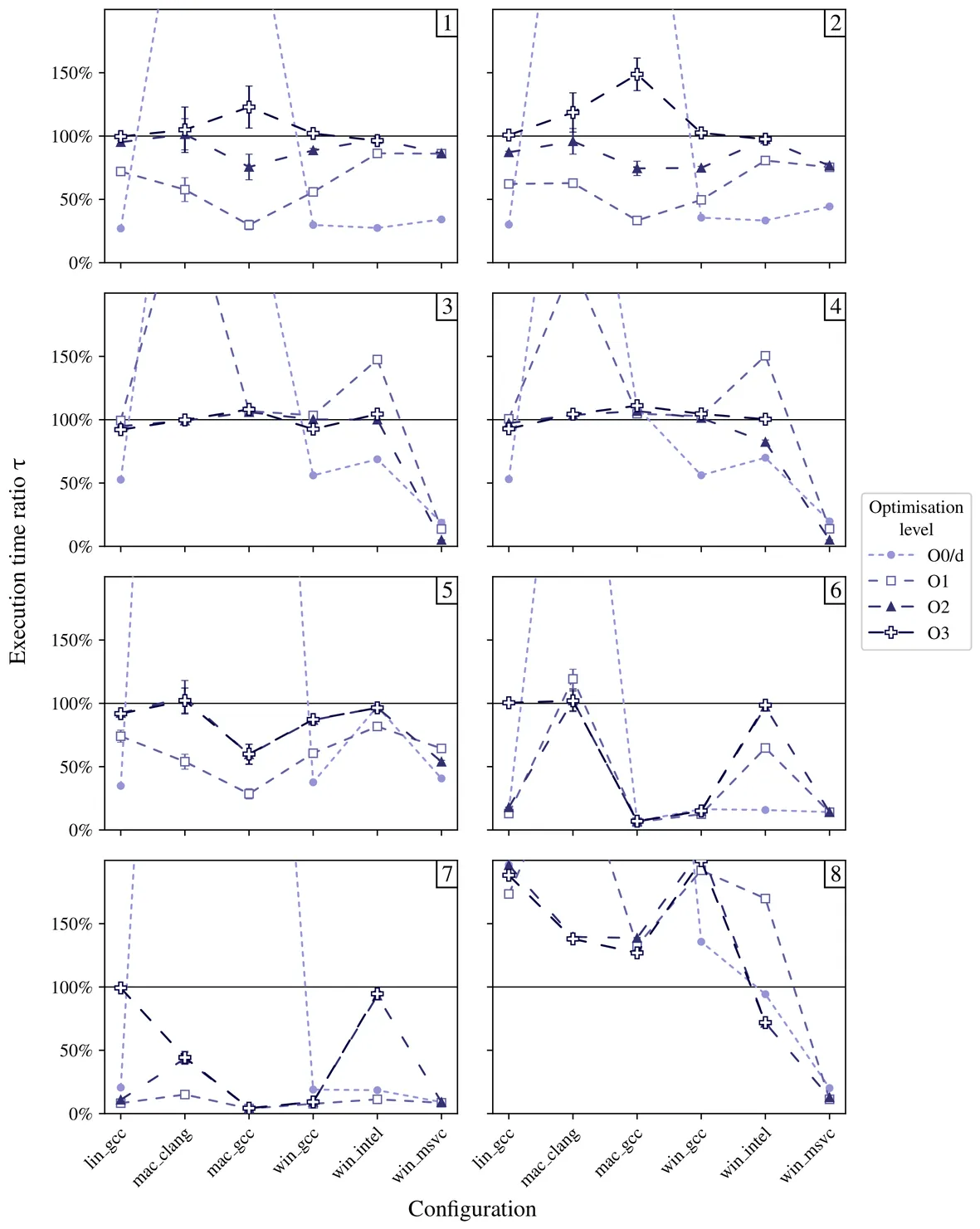

A cross-configuration benchmark is proposed to explore the capacities and limitations of AVX / NEON intrinsic functions in the generic context of a development project, when a vectorisation strategy is required to optimise the code. The main aim is to guide developers in deciding when to use intrinsic functions, depending on the OS, architecture and/or available compiler. Intrinsic functions were observed to be highly efficient in conditional branching, with the execution time of the intrinsic version reaching around 5% of the plain code execution time. However, intrinsic functions were observed to be unnecessary in many cases, as the compilers already auto-vectorise the code well.

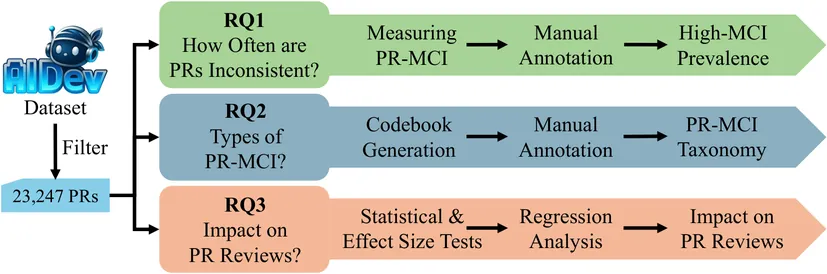

Pull request (PR) descriptions generated by AI coding agents are the primary channel for communicating code changes to human reviewers. However, the alignment between these messages and the actual changes remains unexplored, raising concerns about the trustworthiness of AI agents. To fill this gap, we analyzed 23,247 agentic PRs across five agents using PR message-code inconsistency (PR-MCI). We contributed 974 manually annotated PRs, found that 406 PRs (1.7%) exhibited high PR-MCI, and identified eight PR-MCI types, revealing that descriptions claiming unimplemented changes were the most common issue (45.4%). Statistical tests confirmed that high-MCI PRs had acceptance rates 51.7 percentage points lower (28.3% vs. 80.0%) and took 3.5x longer to merge (55.8 vs. 16.0 hours). Our findings suggest that unreliable PR descriptions undermine trust in AI agents, highlighting the need for PR-MCI verification mechanisms and improved PR generation to enable trustworthy human-AI collaboration.
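The headline figures in this abstract can be cross-checked with a few lines of arithmetic. The sketch below only reuses numbers quoted above; the variable names are illustrative. It shows that the 51.7 figure is the absolute gap between the two acceptance rates (in percentage points), which corresponds to a relative drop of roughly 65%.

```python
# Figures quoted in the abstract; variable names are illustrative only.
total_prs = 23_247
high_mci_prs = 406
accept_high, accept_other = 28.3, 80.0   # acceptance rates in percent
merge_high, merge_other = 55.8, 16.0     # hours to merge

share_high = 100 * high_mci_prs / total_prs        # share of high PR-MCI PRs
gap_points = accept_other - accept_high            # absolute gap, percentage points
relative_drop = 100 * gap_points / accept_other    # relative drop in acceptance
merge_ratio = merge_high / merge_other             # slowdown factor

print(f"{share_high:.1f}% high-MCI, gap {gap_points:.1f} points, "
      f"relative drop {relative_drop:.1f}%, merge ratio {merge_ratio:.1f}x")
```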

This paper presents a longitudinal ethical analysis of Untappd, a social drinking application that gamifies beer consumption through badges, streaks, and social sharing. Building on an exploratory study conducted in 2020, we revisit the platform in 2025 to examine how its gamification features and ethical framings have evolved. Drawing on traditional ethical theory and practical frameworks for Software Engineering, we analyze five categories of badges and their implications for user autonomy and well-being. Our findings show that, despite small adjustments and superficial disclaimers, many of the original ethical issues remain. We argue for continuous ethical reflection embedded into software lifecycles to prevent the normalization of risky behaviors through design.

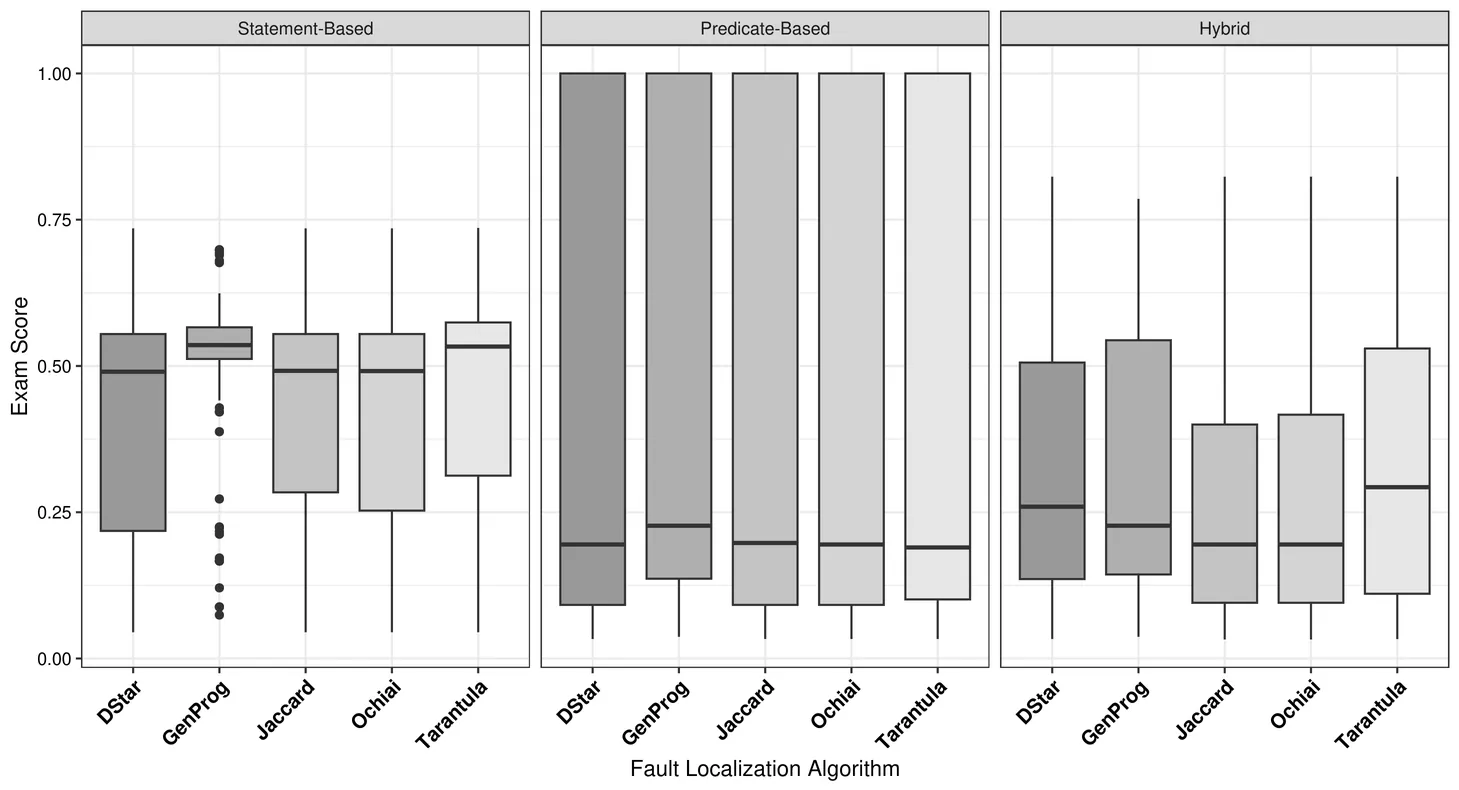

This paper introduces DDMIN-LOC, a technique that combines Delta Debugging Minimization (DDMIN) with Spectrum-Based Fault Localization (SBFL). It can be applied to programs taking string inputs, even when only a single failure-inducing input is available. DDMIN is an algorithm that systematically searches for the minimal failure-inducing input that exposes a bug, given an initial failing input. However, it does not provide information about the faulty statements responsible for the failure. DDMIN-LOC addresses this limitation by collecting the passing and failing inputs generated during the DDMIN process and computing suspiciousness scores for program statements and predicates using SBFL algorithms. These scores are then combined to rank statements according to their likelihood of being faulty. DDMIN-LOC requires only one failing input of the buggy program, although it can be applied only to programs that take string inputs. DDMIN-LOC was evaluated on 136 programs selected from the QuixBugs and Codeflaws benchmarks using the SBFL algorithms Tarantula, Ochiai, GenProg, Jaccard and DStar. Experimental results show that DDMIN-LOC performs best with Jaccard: in most subjects, fewer than 20% of executable lines need to be examined to locate the faulty statements. Moreover, in most subjects, faulty statements are ranked within the top 3 positions in all the generated test suites derived from different failing inputs.
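The general idea of reusing the inputs tried during delta debugging as a test suite for SBFL can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `subject` is a hypothetical, hand-instrumented toy program with a seeded fault, `ddmin` is a simplified variant that only tests complements, and only statement-level Jaccard scores are computed (the paper also scores predicates and combines the results).

```python
from collections import Counter

def subject(s: str, cov: set) -> None:
    """Hypothetical buggy program, hand-instrumented with statement ids."""
    cov.add("s1")
    if any(c.isdigit() for c in s):
        cov.add("s2")
        if "-" in s:
            cov.add("s3")  # seeded fault: a digit together with '-' crashes
            raise ValueError("cannot handle negative numbers")
    cov.add("s4")

runs = []  # (covered statements, failed?) for every input that ddmin tries

def fails(s: str) -> bool:
    """Run the subject once, record its coverage and outcome."""
    cov = set()
    try:
        subject(s, cov)
        failed = False
    except ValueError:
        failed = True
    runs.append((frozenset(cov), failed))
    return failed

def ddmin(inp: str) -> str:
    """Simplified ddmin: drop chunks for as long as the failure persists."""
    assert fails(inp)
    n = 2
    while len(inp) >= 2:
        size = len(inp) // n
        reduced = False
        for start in range(0, len(inp), size):
            complement = inp[:start] + inp[start + size:]
            if fails(complement):
                inp, n, reduced = complement, max(n - 1, 2), True
                break
        if not reduced:
            if n == len(inp):
                break  # no single character can be removed any more
            n = min(n * 2, len(inp))
    return inp

def jaccard(recorded):
    """Jaccard suspiciousness: failed(s) / (total_failed + passed(s))."""
    total_failed = sum(1 for _, failed in recorded if failed)
    ef, ep = Counter(), Counter()
    for cov, failed in recorded:
        (ef if failed else ep).update(cov)
    return {s: ef[s] / (total_failed + ep[s]) for s in set(ef) | set(ep)}

minimal = ddmin("x = 12 - 3")
scores = jaccard(runs)
ranking = sorted(scores, key=scores.get, reverse=True)
print(repr(minimal), ranking)
```

In this toy setting the seeded statement `s3` is executed only by failing runs, so it receives the highest score, while statements executed by both passing and failing runs rank lower.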

We deployed an LLM agent with ReAct reasoning and full data access. It executed flawlessly, yet when asked "Why is completion rate 80%?", it returned metrics instead of causal explanation. The agent knew how to reason but we had not specified what to reason about. This reflects a gap: runtime reasoning frameworks (ReAct, Chain-of-Thought) have transformed LLM agents, but design-time specification--determining what domain knowledge agents need--remains under-explored. We propose 4D-ARE (4-Dimensional Attribution-Driven Agent Requirements Engineering), a preliminary methodology for specifying attribution-driven agents. The core insight: decision-makers seek attribution, not answers. Attribution concerns organize into four dimensions (Results -> Process -> Support -> Long-term), motivated by Pearl's causal hierarchy. The framework operationalizes through five layers producing artifacts that compile directly to system prompts. We demonstrate the methodology through an industrial pilot deployment in financial services. 4D-ARE addresses what agents should reason about, complementing runtime frameworks that address how. We hypothesize systematic specification amplifies the power of these foundational advances. This paper presents a methodological proposal with preliminary industrial validation; rigorous empirical evaluation is planned for future work.

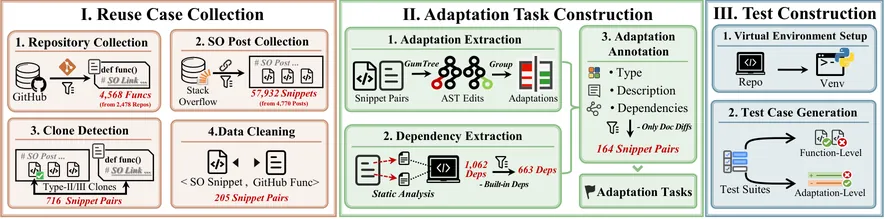

Recent advancements in large language models (LLMs) have automated various software engineering tasks, with benchmarks emerging to evaluate their capabilities. However, for adaptation, a critical activity during code reuse, there is no benchmark to assess LLMs' performance, leaving their practical utility in this area unclear. To fill this gap, we propose AdaptEval, a benchmark designed to evaluate LLMs on code snippet adaptation. Unlike existing benchmarks, AdaptEval incorporates the following three distinctive features: First, Practical Context. Tasks in AdaptEval are derived from developers' practices, preserving rich contextual information from Stack Overflow and GitHub communities. Second, Multi-granularity Annotation. Each task is annotated with requirements at both task and adaptation levels, supporting the evaluation of LLMs across diverse adaptation scenarios. Third, Fine-grained Evaluation. AdaptEval includes a two-tier testing framework combining adaptation-level and function-level tests, which enables evaluating LLMs' performance across various individual adaptations. Based on AdaptEval, we conduct the first empirical study to evaluate six instruction-tuned LLMs and especially three reasoning LLMs on code snippet adaptation. Experimental results demonstrate that AdaptEval enables the assessment of LLMs' adaptation capabilities from various perspectives. It also provides critical insights into their current limitations, particularly their struggle to follow explicit instructions. We hope AdaptEval can facilitate further investigation and enhancement of LLMs' capabilities in code snippet adaptation, supporting their real-world applications.

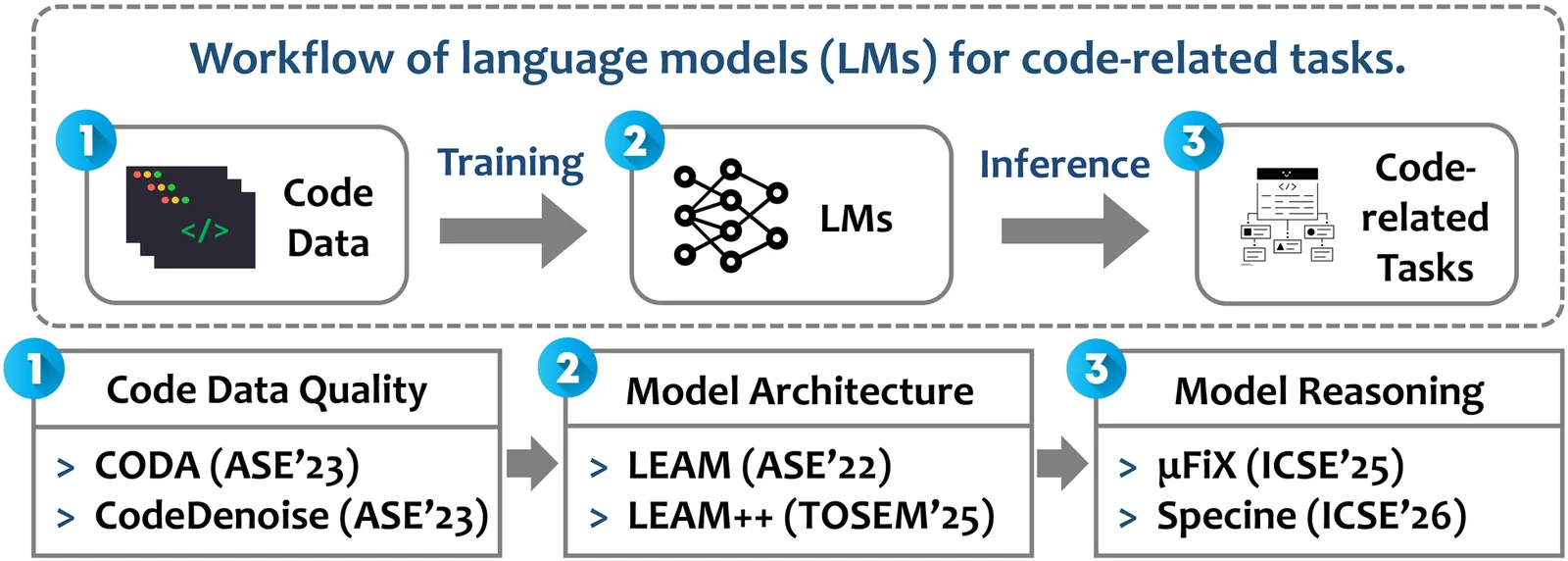

Recent advances in language models (LMs) have driven significant progress in various software engineering tasks. However, existing LMs still struggle with complex programming scenarios due to limitations in data quality, model architecture, and reasoning capability. This research systematically addresses these challenges through three complementary directions: (1) improving code data quality with a code difference-guided adversarial augmentation technique (CODA) and a code denoising technique (CodeDenoise); (2) enhancing model architecture via syntax-guided code LMs (LEAM and LEAM++); and (3) advancing model reasoning with a prompting technique (muFiX) and an agent-based technique (Specine). These techniques aim to promote the practical adoption of LMs in software development and further advance intelligent software engineering.

2601.04124

Issue Tracking Systems (ITSs) enable software developers and managers to collect and resolve issues collaboratively. While researchers have extensively analysed ITS data to automate or assist specific activities such as issue assignments, duplicate detection, or priority prediction, developer studies on ITSs remain rare. Particularly, little is known about the challenges Software Engineering (SE) teams encounter in ITSs and when certain practices and workarounds (such as leaving issue fields like "priority" empty) are considered problematic. To fill this gap, we conducted an in-depth interview study with 26 experienced SE practitioners from different organisations and industries. We asked them about general problems encountered, as well as the relevance of 31 ITS smells (aka potentially problematic practices) discussed in the literature. By applying Thematic Analysis to the interview notes, we identified 14 common problems including issue findability, zombie issues, workflow bloat, and lack of workflow enforcement. Participants also stated that many of the ITS smells do not occur or are not problematic. Our results suggest that ITS problems and smells are highly dependent on context factors such as ITS configuration, workflow stage, and team size. We also discuss potential tooling solutions to configure, monitor, and visualise ITS smells to cope with these challenges.

As Autonomous Driving Systems (ADS) progress towards commercial deployment, there is an increasing focus on ensuring their safety and reliability. While considerable research has been conducted on testing methods for detecting faults in ADS, very little attention has been paid to debugging in ADS. Debugging is an essential process that follows test failures to localise and repair the faults in the systems to maintain their safety and reliability. This Systematic Mapping Study (SMS) aims to provide a detailed overview of the current landscape of ADS debugging, highlighting existing approaches and identifying gaps in research. The study also proposes directions for future work and standards for problem definition and terminology in the field. Our findings reveal various methods for ADS debugging and highlight the current fragmented yet promising landscape.

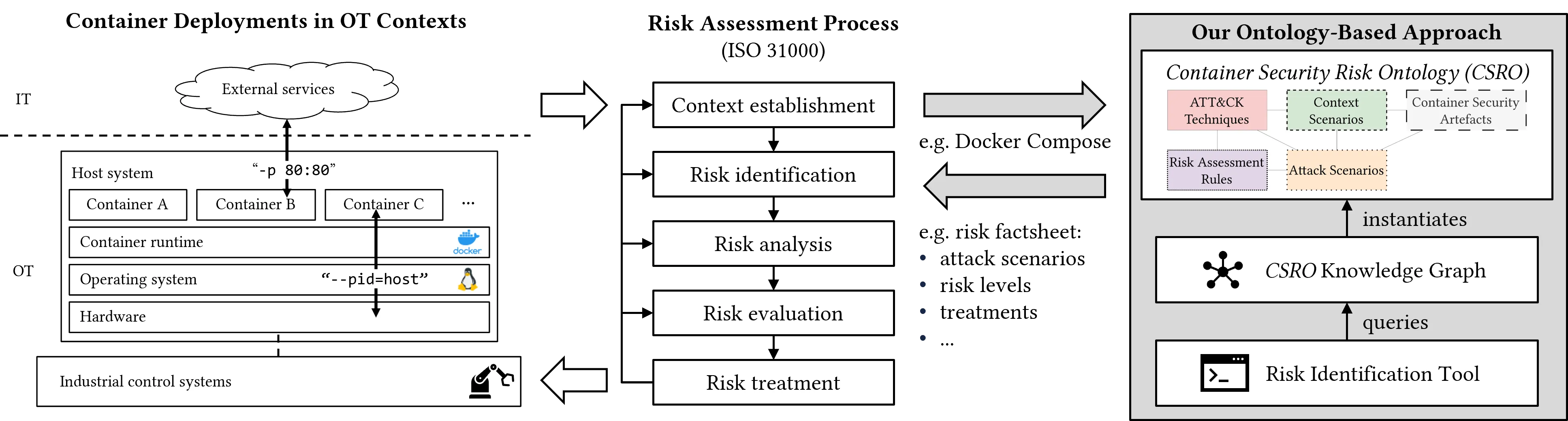

In operational technology (OT) contexts, containerised applications often require elevated privileges to access low-level network interfaces or perform administrative tasks such as application monitoring. These privileges reduce the default isolation provided by containers and introduce significant security risks. Security risk identification for OT container deployments is challenged by hybrid IT/OT architectures, fragmented stakeholder knowledge, and continuous system changes. Existing approaches lack reproducibility, interpretability across contexts, and technical integration with deployment artefacts. We propose a model-based approach, implemented as the Container Security Risk Ontology (CSRO), which integrates five key domains: adversarial behaviour, contextual assumptions, attack scenarios, risk assessment rules, and container security artefacts. Our evaluation of CSRO in a case study demonstrates that the end-to-end formalisation of risk calculation, from artefact to risk level, enables automated and reproducible risk identification. While CSRO currently focuses on technical, container-level treatment measures, its modular and flexible design provides a solid foundation for extending the approach to host-level and organisational risk factors.

2601.03988

Background: Extracting the stages that structure Machine Learning (ML) pipelines from source code is key for gaining a deeper understanding of data science practices. However, the diversity caused by the constant evolution of the ML ecosystem (e.g., algorithms, libraries, datasets) makes this task challenging. Existing approaches either depend on non-scalable, manual labeling, or on ML classifiers that do not properly support the diversity of the domain. These limitations highlight the need for more flexible and reliable solutions. Objective: We evaluate whether Small Language Models (SLMs) can leverage their code understanding and classification abilities to address these limitations, and subsequently how they can advance our understanding of data science practices. Method: We conduct a confirmatory study based on two reference works selected for their relevance to the limitations of the current state of the art. First, we compare several SLMs using Cochran's Q test. The best-performing model is then evaluated against the reference studies using two distinct McNemar's tests. We further analyze how variations in taxonomy definitions affect performance through an additional Cochran's Q test. Finally, a goodness-of-fit analysis is conducted using Pearson's chi-squared tests to compare our insights on data science practices with those from prior studies.
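For readers unfamiliar with McNemar's test, the sketch below shows the exact (binomial) form for comparing two classifiers on the same items. It is a generic illustration, not the study's analysis code: the function name and the discordant counts `b` and `c` are made up for the example, and the abstract does not say whether the exact or the chi-squared form of the test is used.

```python
from math import comb

def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value.

    b: items classifier A labels correctly and classifier B incorrectly
    c: items classifier B labels correctly and classifier A incorrectly
    Under the null hypothesis, each discordant item is equally likely
    to fall in either group, so min(b, c) follows Binomial(b + c, 0.5).
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, no evidence of a difference
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)

# Hypothetical counts, chosen only for illustration.
p_value = mcnemar_exact(2, 12)
print(f"p = {p_value:.4f}")
```

Only the discordant pairs enter the test; items on which both classifiers agree carry no information about which one is better.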

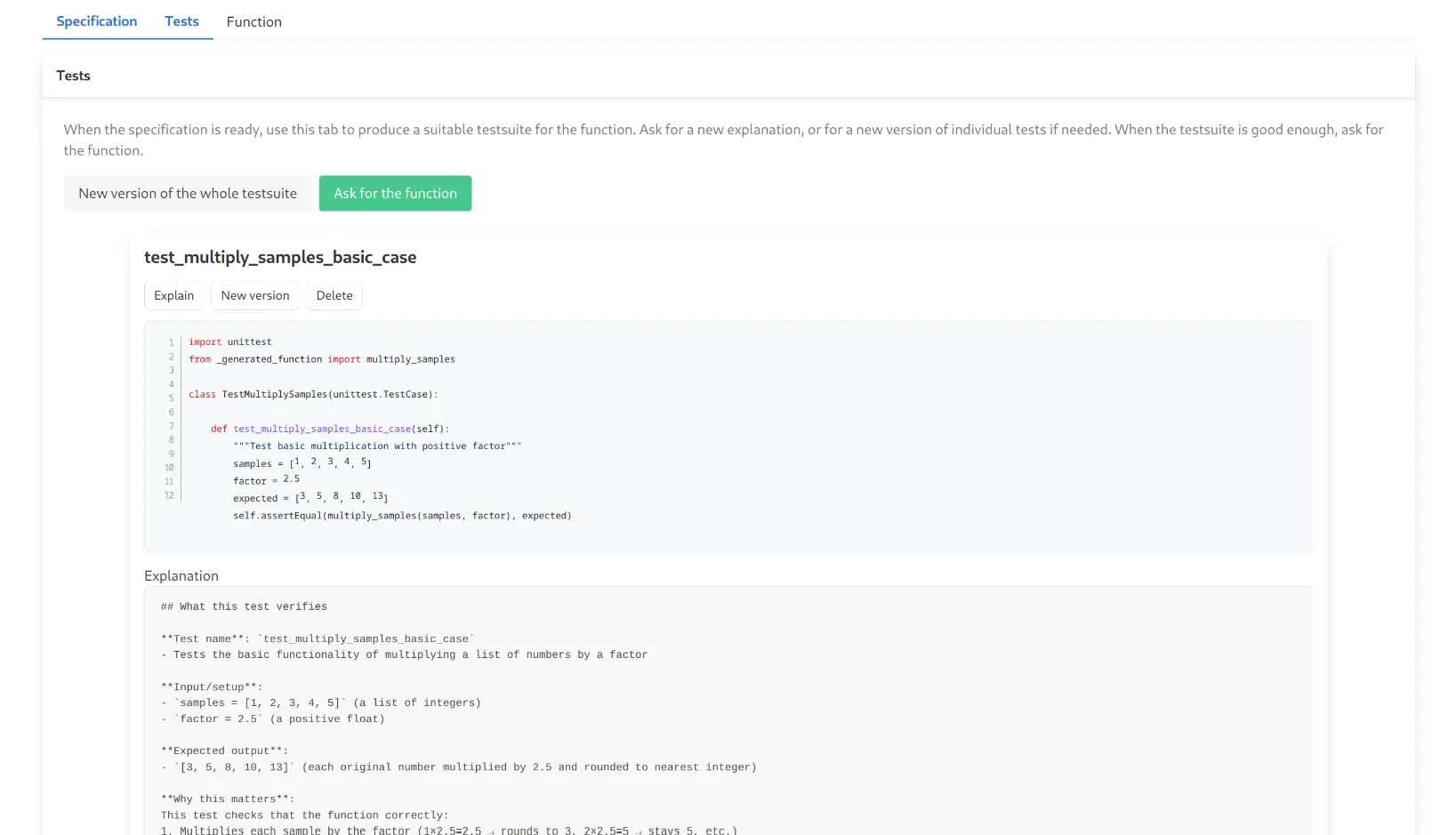

Large Language Models (LLMs) are increasingly integrated into software development workflows, yet their behavior in structured, specification-driven processes remains poorly understood. This paper presents an empirical study design using CURRANTE, a Visual Studio Code extension that enables a human-in-the-loop workflow for LLM-assisted code generation. The tool guides developers through three sequential stages--Specification, Tests, and Function--allowing them to define requirements, generate and refine test suites, and produce functions that satisfy those tests. Participants will solve medium-difficulty problems from the LiveCodeBench dataset, while the tool records fine-grained interaction logs, effectiveness metrics (e.g., pass rate, all-pass completion), efficiency indicators (e.g., time-to-pass), and iteration behaviors. The study aims to analyze how human intervention in specification and test refinement influences the quality and dynamics of LLM-generated code. The results will provide empirical insights into the design of next-generation development environments that align human reasoning with model-driven code generation.

2601.03857

LLMs are increasingly used to boost productivity and support software engineering tasks. However, when applied to socially sensitive decisions such as team composition and task allocation, they raise concerns of fairness. Prior studies have revealed that LLMs may reproduce stereotypes; however, these analyses remain exploratory and examine sensitive attributes in isolation. This study investigates whether LLMs exhibit bias in team composition and task assignment by analyzing the combined effects of candidates' country and pronouns. Using three LLMs and 3,000 simulated decisions, we find systematic disparities: demographic attributes significantly shaped both selection likelihood and task allocation, even when accounting for expertise-related factors. Task distributions further reflected stereotypes, with technical and leadership roles unevenly assigned across groups. Our findings indicate that LLMs exacerbate demographic inequities in software engineering contexts, underscoring the need for fairness-aware assessment.

Large Language Models (LLMs) such as GPT-4, Claude and LLaMA have shown impressive performance in code generation, typically evaluated using benchmarks (e.g., HumanEval). However, effective code generation requires models to understand and apply a wide range of language concepts. If the concepts exercised in benchmarks are not representative of those used in real-world projects, evaluations may yield an incomplete picture. Despite this concern, the representativeness of code concepts in benchmarks has not been systematically examined. To address this gap, we present the first empirical study that analyzes the representativeness of code generation benchmarks through the lens of Knowledge Units (KUs) - cohesive sets of programming language capabilities provided by language constructs and APIs. We analyze KU coverage in two widely used Python benchmarks, HumanEval and MBPP, and compare them with 30 real-world Python projects. Our results show that each benchmark covers only half of the 20 identified KUs, whereas projects exercise all KUs with relatively balanced distributions. In contrast, benchmark tasks exhibit highly skewed KU distributions. To mitigate this misalignment, we propose a prompt-based LLM framework that synthesizes KU-based tasks to rebalance benchmark KU distributions and better align them with real-world usage. Using this framework, we generate 440 new tasks and augment existing benchmarks. The augmented benchmarks substantially improve KU coverage and achieve over a 60% improvement in distributional alignment. Evaluations of state-of-the-art LLMs on these augmented benchmarks reveal consistent and statistically significant performance drops (12.54-44.82%), indicating that existing benchmarks overestimate LLM performance due to their limited KU coverage. Our findings provide actionable guidance for building more realistic evaluations of LLM code-generation capabilities.

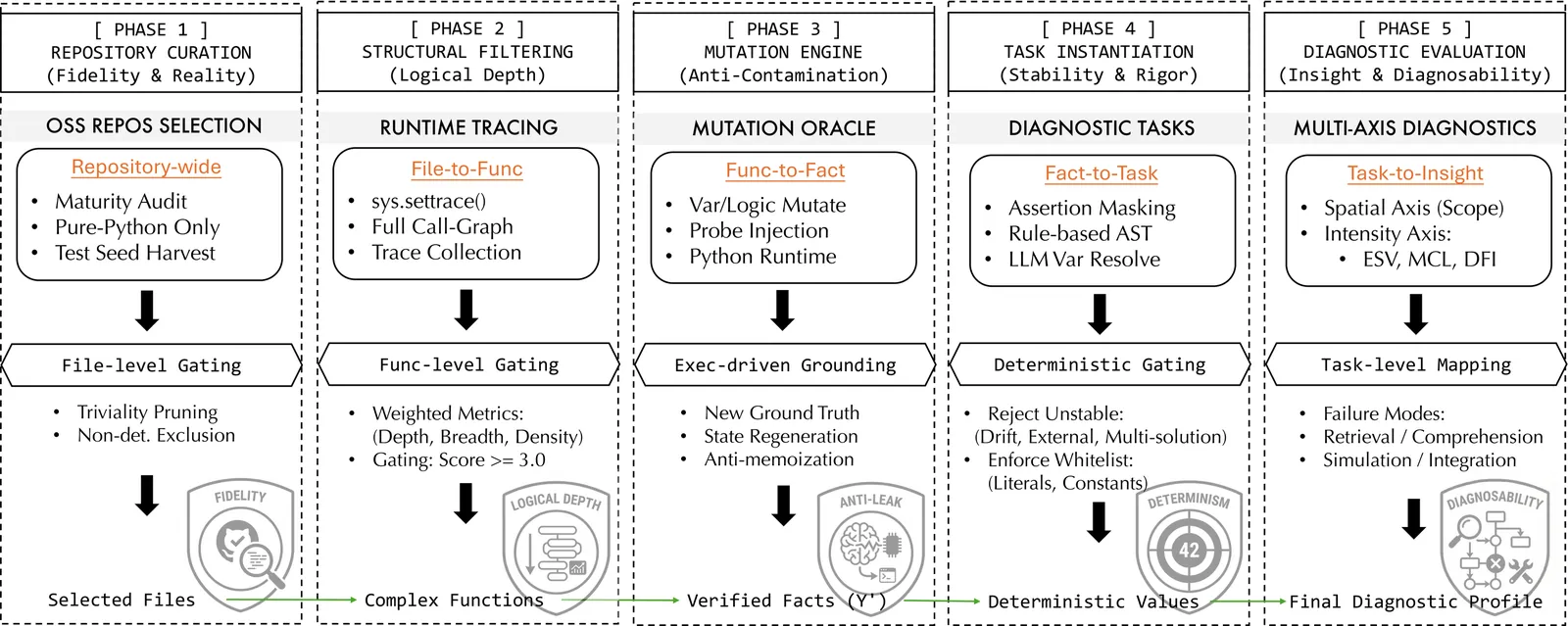

As large language models (LLMs) evolve into autonomous agents, evaluating repository-level reasoning, the ability to maintain logical consistency across massive, real-world, interdependent file systems, has become critical. Current benchmarks typically fluctuate between isolated code snippets and black-box evaluations. We present RepoReason, a white-box diagnostic benchmark centered on abductive assertion verification. To eliminate memorization while preserving authentic logical depth, we implement an execution-driven mutation framework that utilizes the environment as a semantic oracle to regenerate ground-truth states. Furthermore, we establish a fine-grained diagnostic system using dynamic program slicing, quantifying reasoning via three orthogonal metrics: $ESV$ (reading load), $MCL$ (simulation depth), and $DFI$ (integration width). Comprehensive evaluations of frontier models (e.g., Claude-4.5-Sonnet, DeepSeek-v3.1-Terminus) reveal a prevalent aggregation deficit, where integration width serves as the primary cognitive bottleneck. Our findings provide granular white-box insights for optimizing the next generation of agentic software engineering.

Many real-world software tasks require exact transcription of provided data into code, such as cryptographic constants, protocol test vectors, allowlists, and calibration tables. These tasks are operationally sensitive because small omissions or alterations can remain silent while producing syntactically valid programs. This paper introduces a deliberately minimal transcription-to-code benchmark to isolate this reliability concern in LLM-based code generation. Given a list of high-precision decimal constants, a model must generate Python code that embeds the constants verbatim and performs a simple aggregate computation. We describe the prompting variants, evaluation protocol based on exact-string inclusion, and analysis framework used to characterize state-tracking and long-horizon generation failures. The benchmark is intended as a compact stress test that complements existing code-generation evaluations by focusing on data integrity rather than algorithmic reasoning.
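An exact-string inclusion check of the kind this abstract mentions could look like the sketch below. It is an assumption-laden illustration rather than the benchmark's actual harness: the function name, the constants, and the sample "generated" program are invented for the example, and a plain substring test is used even though a stricter check might also enforce token boundaries.

```python
def missing_constants(constants, generated_code):
    """Return the constants that do not appear verbatim in the generated code."""
    return [c for c in constants if c not in generated_code]

# Hypothetical task data, kept as strings so that no digit is lost to float parsing.
constants = [
    "3.14159265358979323846",
    "2.71828182845904523536",
    "1.41421356237309504880",
]

# Hypothetical model output: syntactically valid, but the last constant
# has lost its trailing zero.
generated = """
VALUES = [
    3.14159265358979323846,
    2.71828182845904523536,
    1.4142135623730950488,
]
print(sum(VALUES))
"""

missing = missing_constants(constants, generated)
print(missing)
```

The altered constant evaluates to the same float, so the program still runs and prints the same sum; only the string-level comparison reveals that the provided data was not transcribed verbatim.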

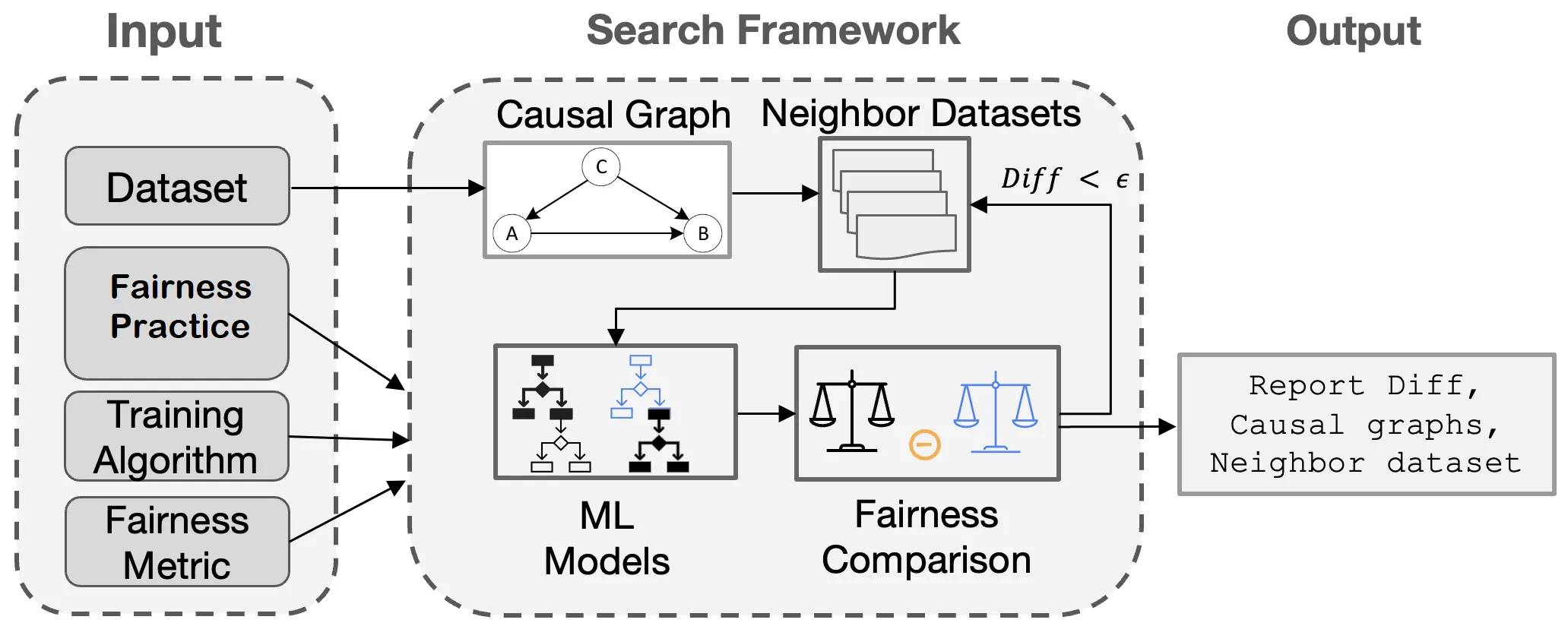

Machine learning (ML) algorithms are increasingly deployed to make critical decisions in socioeconomic applications such as finance, criminal justice, and autonomous driving. However, due to their data-driven and pattern-seeking nature, ML algorithms may develop decision logic that disproportionately distributes opportunities, benefits, resources, or information among different population groups, potentially harming marginalized communities. In response to such fairness concerns, the software engineering and ML communities have made significant efforts to establish the best practices for creating fair ML software. These include fairness interventions for training ML models, such as including sensitive features, selecting non-sensitive attributes, and applying bias mitigators. But how reliably can software professionals tasked with developing data-driven systems depend on these recommendations? And how well do these practices generalize in the presence of faulty labels, missing data, or distribution shifts? These questions form the core theme of this paper.

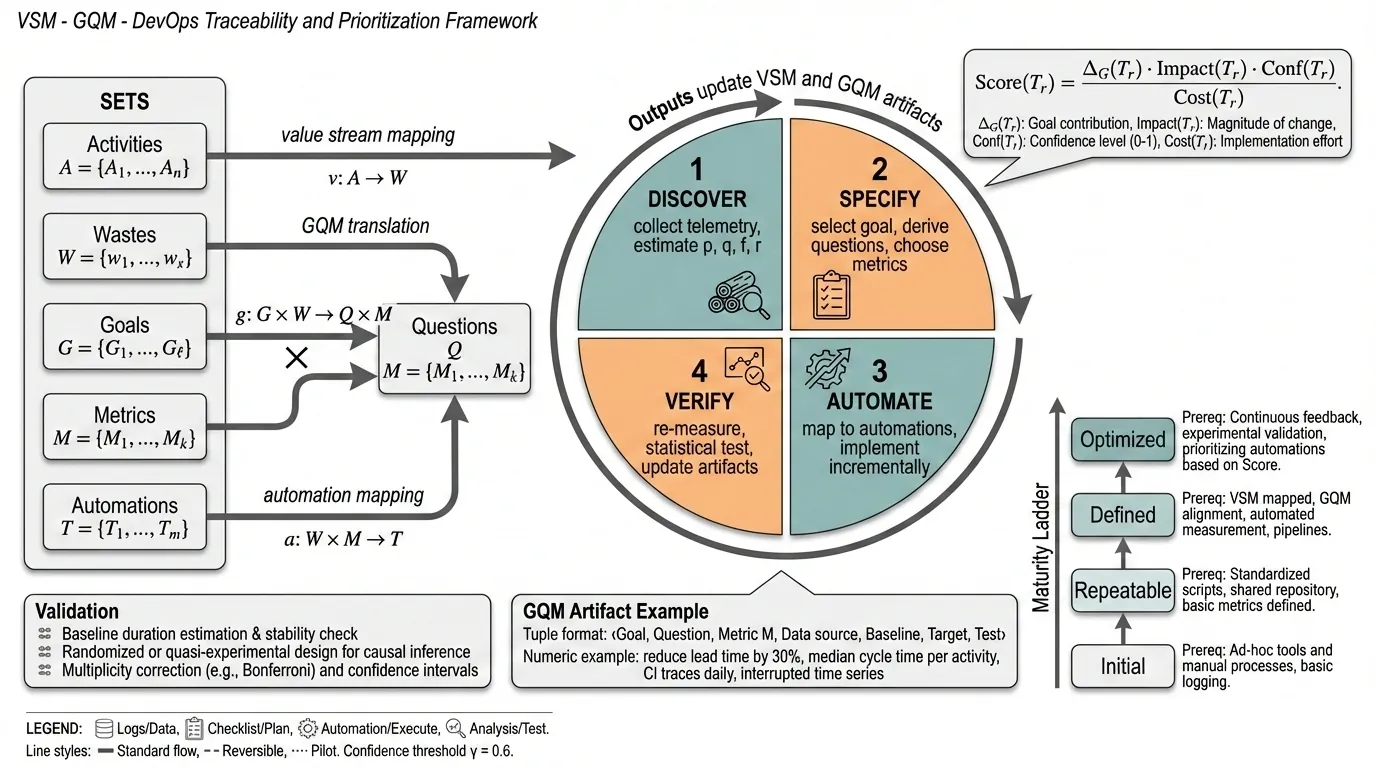

DevOps automation can accelerate software delivery, yet many organizations still struggle to justify and prioritize automation work in terms of strategic project-management outcomes such as waste reduction, delivery predictability, cross-team coordination, and customer-facing quality. This paper presents VSM-GQM-DevOps, a unified, traceable framework that integrates (i) Value Stream Mapping (VSM) to visualize the end-to-end delivery system and quantify delays, rework, and handoffs, (ii) the Goal-Question-Metric (GQM) paradigm to translate stakeholder objectives into a minimal, decision-relevant measurement model (combining DORA with project and team outcomes), and (iii) maturity-aligned DevOps automation to remediate empirically observed bottlenecks through small, reversible interventions. The framework operationalizes traceability from observed waste to goal-aligned questions, metrics, and automation candidates, and provides a defensible prioritization approach that balances expected impact, confidence, and cost. We also define a multi-site, longitudinal mixed-method validation protocol that combines telemetry-based quasi-experimental analysis (interrupted time series and, where feasible, controlled rollouts) with qualitative triangulation from interviews and retrospectives. The expected contribution is a validated pathway and a set of practical instruments that enable organizations to select automation investments that demonstrably improve both delivery performance and project-management outcomes.

2601.03556

Testing is a critical practice for ensuring software correctness and long-term maintainability. As agentic coding tools increasingly submit pull requests (PRs), it becomes essential to understand how testing appears in these agent-driven workflows. Using the AIDev dataset, we present an empirical study of test inclusion in agentic pull requests. We examine how often tests are included, when they are introduced during the PR lifecycle, and how test-containing PRs differ from non-test PRs in terms of size, turnaround time, and merge outcomes. Across agents, test-containing PRs become more common over time and tend to be larger and take longer to complete, while merge rates remain largely similar. We also observe variation across agents in both test adoption and the balance between test and production code within test PRs. Our findings provide a descriptive view of testing behavior in agentic pull requests and offer empirical grounding for future studies of autonomous software development.

Open-source scientific software is abundant, yet most tools remain difficult to compile, configure, and reuse, sustaining a small-workshop mode of scientific computing. This deployment bottleneck limits reproducibility, large-scale evaluation, and the practical integration of scientific tools into modern AI-for-Science (AI4S) and agentic workflows. We present Deploy-Master, a one-stop agentic workflow for large-scale tool discovery, build specification inference, execution-based validation, and publication. Guided by a taxonomy spanning 90+ scientific and engineering domains, our discovery stage starts from a recall-oriented pool of over 500,000 public repositories and progressively filters it to 52,550 executable tool candidates under license- and quality-aware criteria. Deploy-Master transforms heterogeneous open-source repositories into runnable, containerized capabilities grounded in execution rather than documentation claims. In a single day, we performed 52,550 build attempts and constructed reproducible runtime environments for 50,112 scientific tools. Each successful tool is validated by a minimal executable command and registered in SciencePedia for search and reuse, enabling direct human use and optional agent-based invocation. Beyond delivering runnable tools, we report a deployment trace at the scale of 50,000 tools, characterizing throughput, cost profiles, failure surfaces, and specification uncertainty that become visible only at scale. These results explain why scientific software remains difficult to operationalize and motivate shared, observable execution substrates as a foundation for scalable AI4S and agentic science.