Data Structures and Algorithms

Covers data structures and analysis of algorithms.

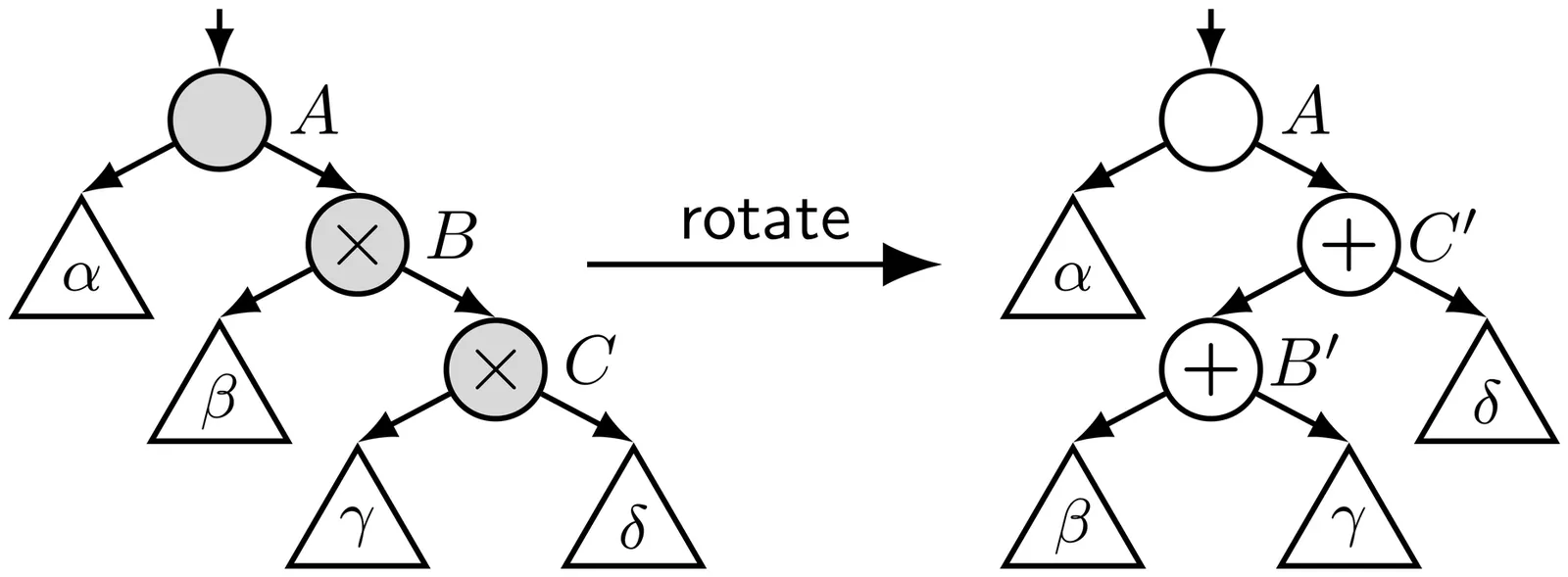

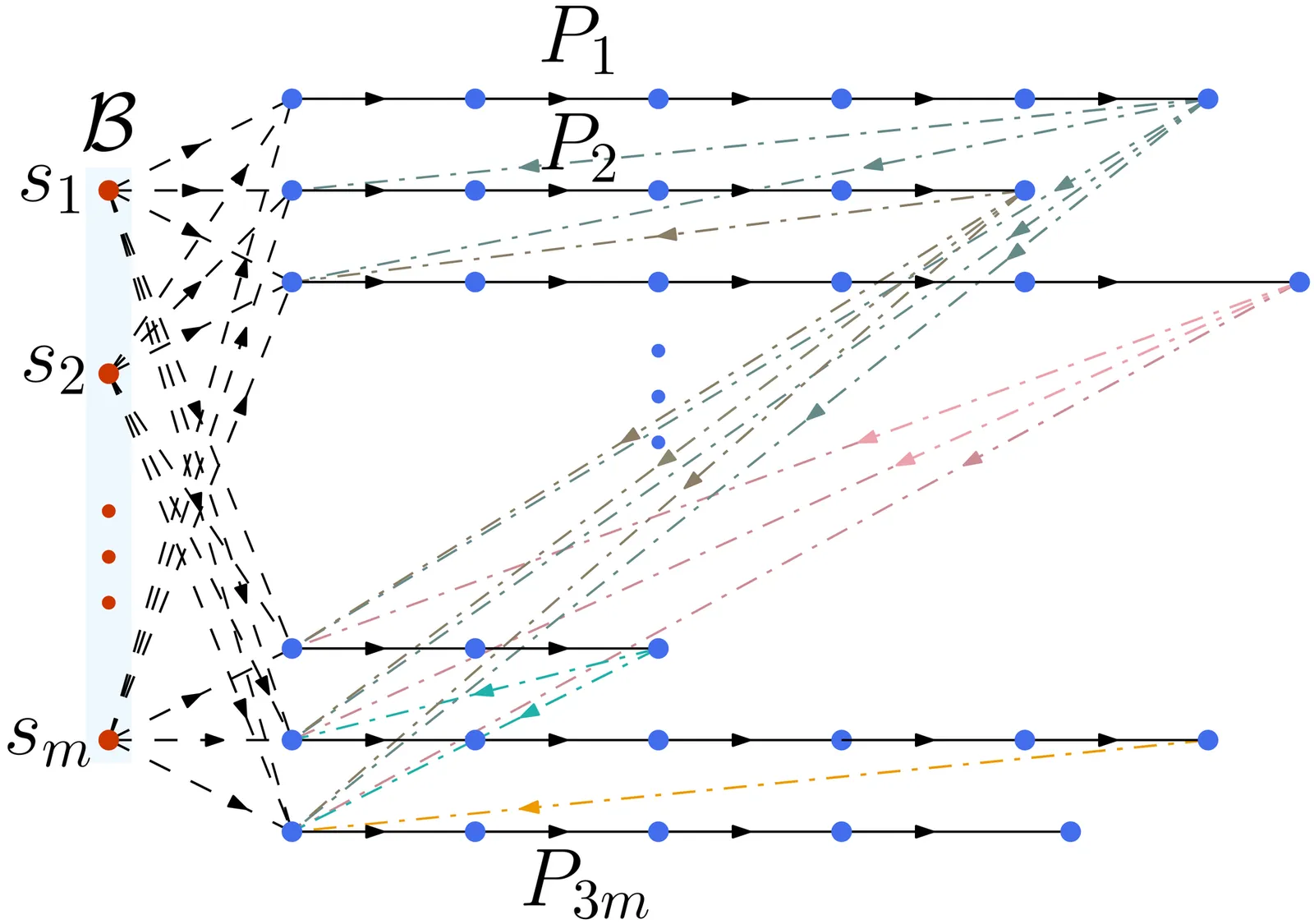

Augmentation makes search trees tremendously more versatile, allowing them to support efficient aggregation queries, order-statistic queries, and range queries in addition to insertion, deletion, and lookup. In this paper, we present the first lock-free augmented balanced search tree. Our algorithmic ideas build upon a recent augmented unbalanced search tree presented by Fatourou and Ruppert [DISC, 2024]. We implement both data structures, solving some memory reclamation challenges in the process, and provide an experimental performance analysis of them. We also present optimized versions of our balanced tree that use delegation to achieve better scalability and performance (by more than 2x in some workloads). Our experiments show that our augmented balanced tree is 2.2 to 30 times faster than the unbalanced augmented tree, and up to several orders of magnitude faster than unaugmented trees on 120 threads.

Detecting and counting copies of permutation patterns are fundamental algorithmic problems, with applications in the analysis of rankings, nonparametric statistics, and property testing tasks such as independence and quasirandomness testing. From an algorithmic perspective, there is a sharp difference in complexity between detecting and counting the copies of a given length-$k$ pattern in a length-$n$ permutation. The former admits a $2^{\mathcal{O}(k^2)} \cdot n$ time algorithm (Guillemot and Marx, 2014) while the latter cannot be solved in time $f(k)\cdot n^{o(k/\log k)}$ unless the Exponential Time Hypothesis (ETH) fails (Berendsohn, Kozma, and Marx, 2021). In fact, already for patterns of length 4, exact counting is unlikely to admit near-linear time algorithms under standard fine-grained complexity assumptions (Dudek and Gawrychowski, 2020). Recently, Ben-Eliezer, Mitrović and Srivastava (2026) showed that for patterns of length up to 5, a $(1+\varepsilon)$-approximation of the pattern count can be computed in near-linear time, yielding a separation between exact and approximate counting for small patterns, and conjectured that approximate counting is asymptotically easier than exact counting in general. We strongly refute their conjecture by showing that, under ETH, no algorithm running in time $f(k)\cdot n^{o(k/\log k)}$ can approximate the number of copies of a length-$k$ pattern within a multiplicative factor $n^{(1/2-\varepsilon)k}$. The lower bound on runtime matches the conditional lower bound for exact pattern counting, and the obtained bound on the multiplicative error factor is essentially tight, as an $n^{k/2}$-approximation can be computed in $2^{\mathcal{O}(k^2)}\cdot n$ time using an algorithm for pattern detection.
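As a point of reference for what a "copy" of a pattern means, the following is a brute-force sketch that counts order-isomorphic subsequences in roughly $n^k$ time. It only illustrates the problem definition and is not any of the algorithms cited above; the function names are assumptions.

```python
from itertools import combinations


def relative_order(values):
    """Map distinct values to their ranks 1..k, preserving positions."""
    order = sorted(range(len(values)), key=lambda j: values[j])
    ranks = [0] * len(values)
    for r, j in enumerate(order):
        ranks[j] = r + 1
    return tuple(ranks)


def count_pattern(perm, pattern):
    """Count subsequences of perm that are order-isomorphic to pattern.

    pattern is a permutation of 1..k; perm is a sequence of distinct values.
    """
    k = len(pattern)
    target = tuple(pattern)
    return sum(
        1
        for idx in combinations(range(len(perm)), k)
        if relative_order([perm[i] for i in idx]) == target
    )


def contains_pattern(perm, pattern):
    """Detection is the special case of asking whether the count is positive."""
    return count_pattern(perm, pattern) > 0
```

For example, counting the pattern `[2, 1]` counts the inversions of the permutation.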

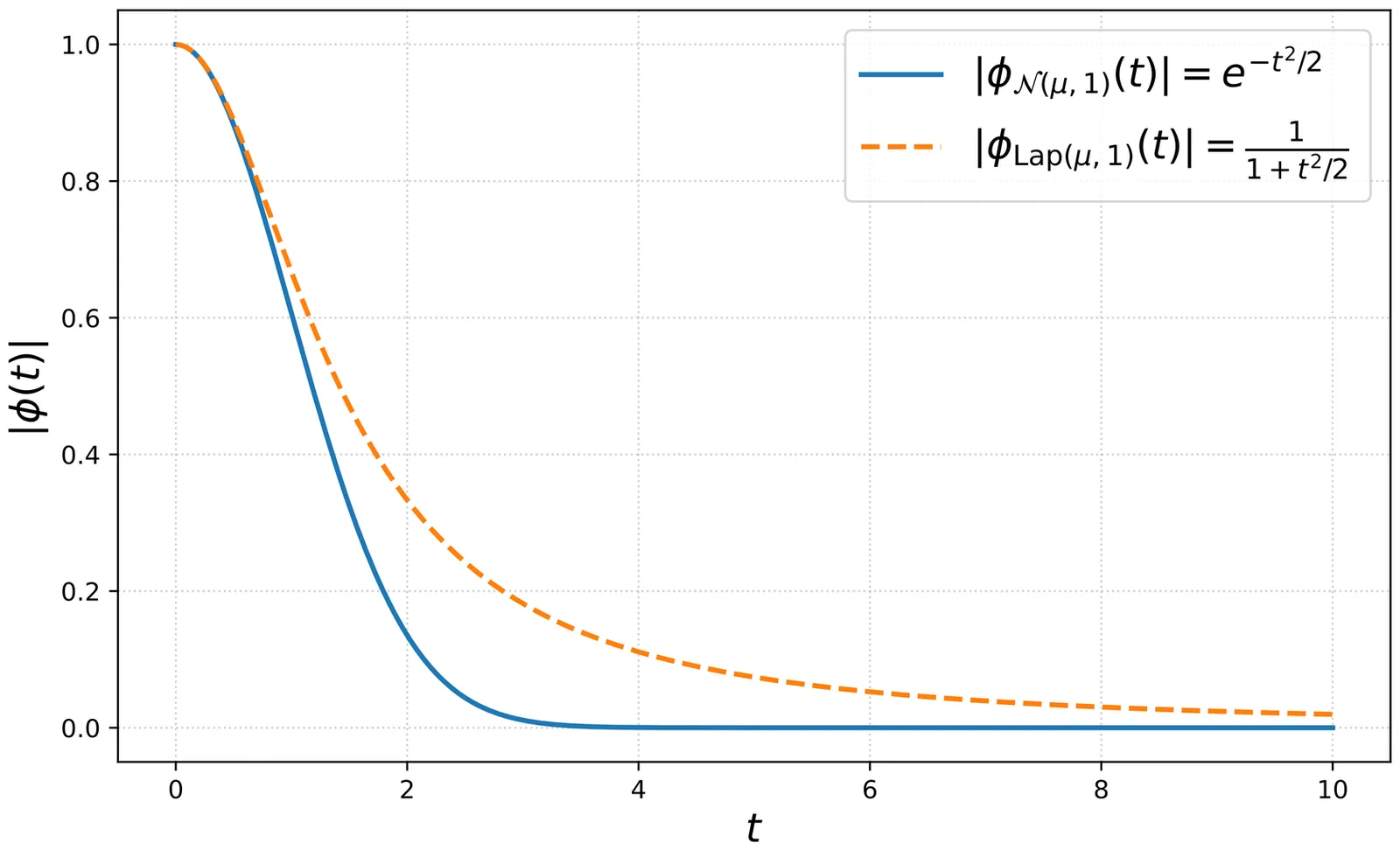

In this work, we give a ${\rm poly}(d,k)$ time and sample algorithm for efficiently learning the parameters of a mixture of $k$ spherical distributions in $d$ dimensions. Unlike all previous methods, our techniques apply to heavy-tailed distributions and include examples that do not even have finite covariances. Our method succeeds whenever the cluster distributions have a characteristic function with sufficiently heavy tails. Such distributions include the Laplace distribution but crucially exclude Gaussians. All previous methods for learning mixture models relied implicitly or explicitly on the low-degree moments. Even for the case of Laplace distributions, we prove that any such algorithm must use super-polynomially many samples. Our method thus adds to the short list of techniques that bypass the limitations of the method of moments. Somewhat surprisingly, our algorithm does not require any minimum separation between the cluster means. This is in stark contrast to spherical Gaussian mixtures where a minimum $\ell_2$-separation is provably necessary even information-theoretically [Regev and Vijayaraghavan '17]. Our methods compose well with existing techniques and allow obtaining "best of both worlds" guarantees for mixtures where every component either has a heavy-tailed characteristic function or has a sub-Gaussian tail with a light-tailed characteristic function. Our algorithm is based on a new approach to learning mixture models via efficient high-dimensional sparse Fourier transforms. We believe that this method will find more applications to statistical estimation. As an example, we give an algorithm for consistent robust mean estimation against noise-oblivious adversaries, a model practically motivated by the literature on multiple hypothesis testing. It was formally proposed in a recent Master's thesis by one of the authors, and has already inspired follow-up works.

2601.05044

Assuming that P is not equal to NP, the worst-case run time of any algorithm solving an NP-complete problem must be super-polynomial. But what is the fastest run time we can get? Before one can even hope to approach this question, a more provocative question presents itself: Since for many problems the naive brute-force baseline algorithms are still the fastest ones, maybe their run times are already optimal? The area that we call in this survey "fine-grained complexity of NP-complete problems" studies exactly this question. We invite the reader to catch up on selected classic results as well as delve into exciting recent developments in a riveting tour through the area passing by (among others) algebra, complexity theory, extremal and additive combinatorics, cryptography, and, of course, last but not least, algorithm design.

2601.04756

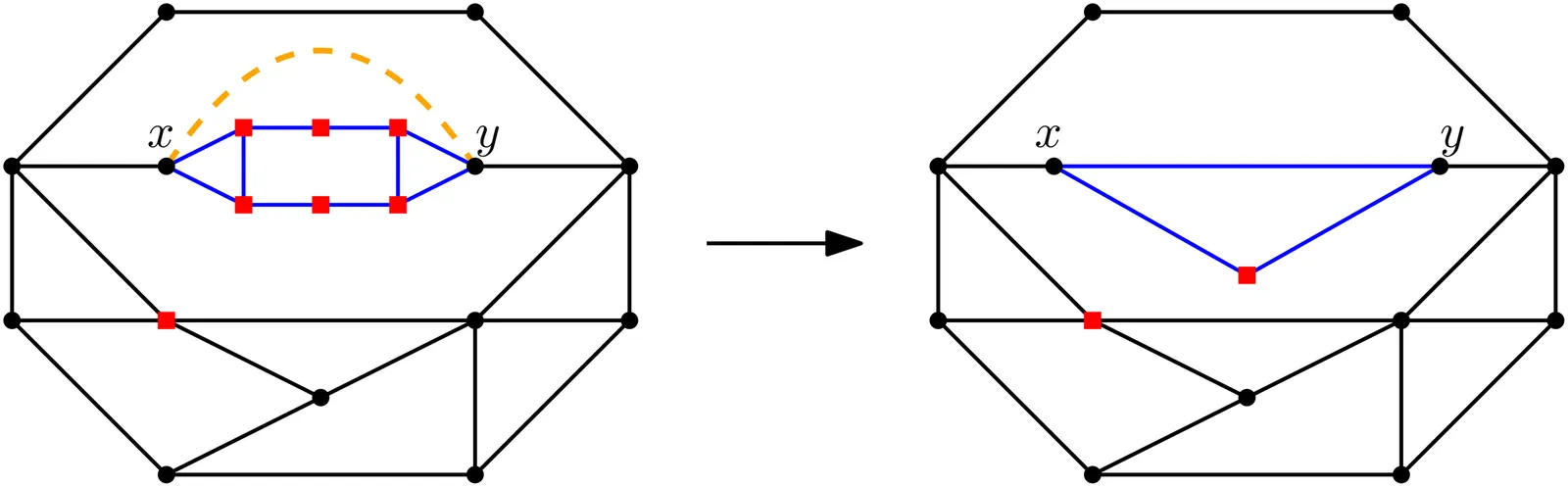

A connectivity function on a finite set $V$ is a symmetric submodular function $f \colon 2^V \to \mathbb{Z}$ with $f(\emptyset)=0$. We prove that finding a branch-decomposition of width at most $k$ for a connectivity function given by an oracle is fixed-parameter tractable (FPT), by providing an algorithm of running time $2^{O(k^2)} γn^6 \log n$, where $γ$ is the time to compute $f(X)$ for any set $X$, and $n = |V|$. This improves the previous algorithm by Oum and Seymour [J. Combin. Theory Ser.~B, 2007], which runs in time $γn^{O(k)}$. Our algorithm can be applied to rank-width of graphs, branch-width of matroids, branch-width of (hyper)graphs, and carving-width of graphs. This resolves an open problem asked by Hliněný [SIAM J. Comput., 2005], who asked whether branch-width of matroids given by the rank oracle is fixed-parameter tractable. Furthermore, our algorithm improves the best known dependency on $k$ in the running times of FPT algorithms for graph branch-width, rank-width, and carving-width.
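The definition of a connectivity function can be checked by brute force on small ground sets. The sketch below uses the edge-cut function of a graph (the function underlying carving-width) as the oracle and verifies $f(\emptyset)=0$, symmetry, and submodularity over all subsets. It is exponential in $|V|$ and purely illustrative; all names are assumptions.

```python
from itertools import combinations


def cut_function(edges):
    """Return f(X) = number of edges with exactly one endpoint in X."""
    def f(X):
        X = set(X)
        return sum(1 for u, v in edges if (u in X) != (v in X))
    return f


def all_subsets(V):
    V = list(V)
    for r in range(len(V) + 1):
        for c in combinations(V, r):
            yield frozenset(c)


def is_connectivity_function(f, V):
    """Check f(empty) = 0, symmetry, and submodularity by enumeration."""
    V = frozenset(V)
    sets = list(all_subsets(V))
    if f(frozenset()) != 0:
        return False
    # Symmetry: f(X) = f(V \ X) for every X.
    if any(f(X) != f(V - X) for X in sets):
        return False
    # Submodularity: f(X) + f(Y) >= f(X | Y) + f(X & Y) for all X, Y.
    return all(
        f(X) + f(Y) >= f(X | Y) + f(X & Y)
        for X in sets
        for Y in sets
    )


# A 4-cycle on vertices 0..3.
cycle_edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
f = cut_function(cycle_edges)
```

In the oracle model of the abstract, each call to `f` would cost $γ$ time; here it simply scans the edge list.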

In this paper, we study the dominating set problem in \emph{RDV graphs}, a graph class that lies between interval graphs and chordal graphs and is defined as the \textbf{v}ertex-intersection graphs of \textbf{d}ownward paths in a \textbf{r}ooted tree. It was shown in a previous paper that adjacency queries in an RDV graph can be reduced to the question whether a horizontal segment intersects a vertical segment. This was then used to find a maximum matching in an $n$-vertex RDV graph, using priority search trees, in $O(n\log n)$ time, i.e., without even looking at all edges. In this paper, we show that if we additionally use a ray shooting data structure, we can also find a minimum dominating set in an RDV graph in $O(n\log n)$ time (presuming a linear-sized representation of the graph is given). The same idea also yields a new proof that a minimum dominating set in an interval graph can be found in $O(n)$ time.

A Multinomial Logit (MNL) model is composed of a finite universe of items $[n]=\{1,..., n\}$, each assigned a positive weight. A query specifies an admissible subset -- called a slate -- and the model chooses one item from that slate with probability proportional to its weight. This query model is also known as the Plackett-Luce model or conditional sampling oracle in the literature. Although MNLs have been studied extensively, a basic computational question remains open: given query access to slates, how efficiently can we learn weights so that, for every slate, the induced choice distribution is within total variation distance $\varepsilon$ of the ground truth? This question is central to MNL learning and has direct implications for modern recommender system interfaces. We provide two algorithms for this task, one with adaptive queries and one with non-adaptive queries. Each algorithm outputs an MNL $M'$ that induces, for each slate $S$, a distribution $M'_S$ on $S$ that is within $\varepsilon$ total variation distance of the true distribution. Our adaptive algorithm makes $O\left(\frac{n}{\varepsilon^{3}}\log n\right)$ queries, while our non-adaptive algorithm makes $O\left(\frac{n^{2}}{\varepsilon^{3}}\log n \log\frac{n}{\varepsilon}\right)$ queries. Both algorithms query only slates of size two and run in time proportional to their query complexity. We complement these upper bounds with lower bounds of $Ω\left(\frac{n}{\varepsilon^{2}}\log n\right)$ for adaptive queries and $Ω\left(\frac{n^{2}}{\varepsilon^{2}}\log n\right)$ for non-adaptive queries, thus proving that our adaptive algorithm is optimal in its dependence on the support size $n$, while the non-adaptive one is tight within a $\log n$ factor.
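The query model and the error measure can be written down directly. This minimal sketch computes the choice distribution an MNL induces on a slate and the total variation distance between two such distributions; it does not implement the learning algorithms from the abstract, and the function names are assumptions.

```python
import random


def slate_distribution(weights, slate):
    """Choice probabilities on a slate: proportional to item weights."""
    total = sum(weights[i] for i in slate)
    return {i: weights[i] / total for i in slate}


def query(weights, slate, rng=random):
    """Simulate one query: sample an item from the slate."""
    items = list(slate)
    return rng.choices(items, weights=[weights[i] for i in items], k=1)[0]


def tv_distance(p, q):
    """Total variation distance between two distributions on the same slate."""
    return 0.5 * sum(abs(p[i] - q[i]) for i in p)


true_weights = {1: 1.0, 2: 3.0, 3: 4.0}
guess_weights = {1: 1.0, 2: 1.0, 3: 4.0}
pair = [1, 2]  # a slate of size two, as queried by both algorithms
```

On the slate `[1, 2]`, the true model picks item 2 with probability 3/4 while the guess picks it with probability 1/2, so the two distributions are at total variation distance 1/4.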

Given a planar graph, a subset of its vertices called terminals, and $k \in \mathbb{N}$, the Face Cover Number problem asks whether the terminals lie on the boundaries of at most $k$ faces of some embedding of the input graph. When a plane graph is given in the input, the problem is known to have a polynomial kernel [GarneroST17]. In this paper, we present the first polynomial kernel for Face Cover Number when the input is a planar graph (without a fixed embedding). Our approach overcomes the challenge of not having a predefined set of face boundaries by building a kernel bottom-up on an SPR-tree while preserving the essential properties of the face cover along the way.

To achieve fast recovery from link failures, most modern communication networks feature fully decentralized fast re-routing mechanisms. These re-routing mechanisms rely on pre-installed static re-routing rules at the nodes (the routers), which depend only on local failure information, namely on the failed links incident to the node. Ideally, a network is perfectly resilient: the re-routing rules ensure that packets are always successfully routed to their destinations as long as the source and the destination are still physically connected in the underlying network after the failures. Unfortunately, there are examples where achieving perfect resilience is not possible. Surprisingly, only very little is known about the algorithmic aspect of when and how perfect resilience can be achieved. We investigate the computational complexity of analyzing such local fast re-routing mechanisms. Our main result is a negative one: we show that even checking whether a given set of static re-routing rules ensures perfect resilience is coNP-complete. We also show coNP-completeness of the so-called ideal resilience, a weaker notion of resilience often considered in the literature. Additionally, we investigate other fundamental variations of the problem. In particular, we show that our coNP-completeness proof also applies to scenarios where the re-routing rules have specific patterns (known as skipping in the literature). On the positive side, for scenarios where nodes do not have information about the link from which a packet arrived (the so-called in-port), we present linear-time algorithms for both the verification and synthesis problem for perfect resilience.

2601.03643

A $k$-connectivity oracle for a graph $G=(V,E)$ is a data structure that given $s,t \in V$ determines whether there are at least $k+1$ internally disjoint $st$-paths in $G$. For undirected graphs, Pettie, Saranurak & Yin [STOC 2022, pp. 151-161] proved that any $k$-connectivity oracle requires $Ω(kn)$ bits of space. They asked whether $Ω(kn)$ bits are still necessary if $G$ is $k$-connected. We will show by a very simple proof that this is so even if $G$ is $k$-connected, answering this open question.

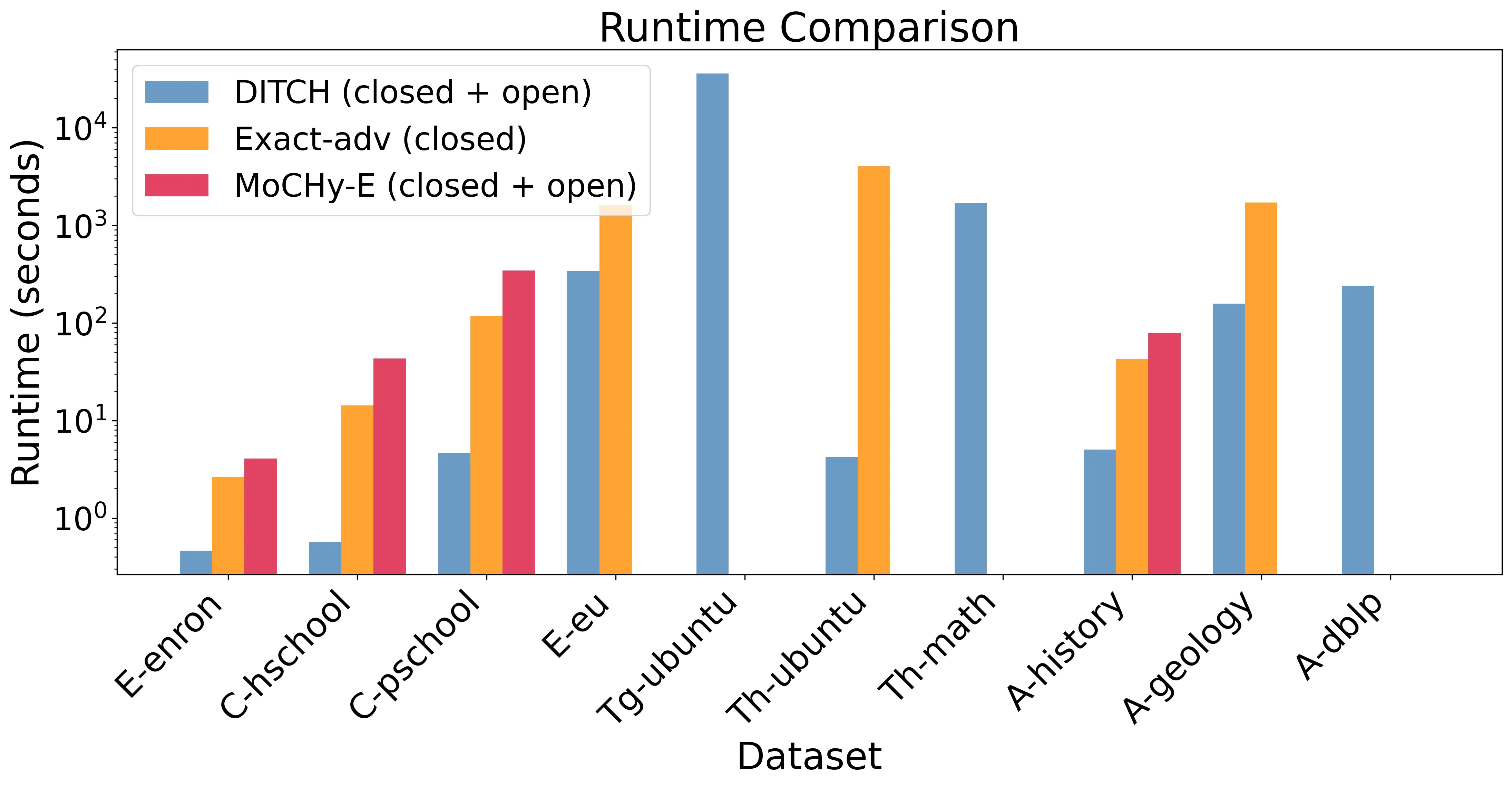

Counting the number of small patterns is a central task in network analysis. While this problem is well studied for graphs, many real-world datasets are naturally modeled as hypergraphs, motivating the need for efficient hypergraph motif counting algorithms. In particular, we study the problem of counting hypertriangles, that is, collections of three pairwise-intersecting hyperedges. Unlike graph triangles, these hypergraph patterns have a rich structure with multiple distinct intersection patterns. Inspired by classical graph algorithms based on orientations and degeneracy, we develop a theoretical framework that generalizes these concepts to hypergraphs and yields provable algorithms for hypertriangle counting. We implement these ideas in DITCH (Degeneracy Inspired Triangle Counter for Hypergraphs) and show experimentally that it is 10-100x faster and more memory efficient than existing state-of-the-art methods.

We study $τ$-Bounded-Density Edge Deletion ($τ$-BDED), where given an undirected graph $G$, the task is to remove as few edges as possible to obtain a graph $G'$ where no subgraph of $G'$ has density more than $τ$. The density of a (sub)graph is the number of edges divided by the number of vertices. This problem was recently introduced and shown to be NP-hard for $τ\in \{2/3, 3/4, 1 + 1/25\}$, but polynomial-time solvable for $τ\in \{0,1/2,1\}$ [Bazgan et al., JCSS 2025]. We provide a complete dichotomy with respect to the target density $τ$: 1. If $2τ\in \mathbb{N}$ (half-integral target density) or $τ< 2/3$, then $τ$-BDED is polynomial-time solvable. 2. Otherwise, $τ$-BDED is NP-hard. We complement the NP-hardness with fixed-parameter tractability with respect to the treewidth of $G$. Moreover, for integral target density $τ\in \mathbb{N}$, we show $τ$-BDED to be solvable in randomized $O(m^{1 + o(1)})$ time. Our algorithmic results are based on a reduction to a new general flow problem on restricted networks that, depending on $τ$, can be solved via Maximum s-t-Flow or General Factors. We believe this connection between these variants of flow and matching to be of independent interest.
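The density constraint can be illustrated with an exponential-time checker that computes the maximum density over all vertex subsets (induced subgraphs suffice, since adding edges among a fixed vertex set never lowers the density). This is a sketch of the definition only, not of the flow-based algorithms in the abstract; the function names are assumptions.

```python
from fractions import Fraction
from itertools import combinations


def max_subgraph_density(n, edges):
    """Maximum of |E(H)| / |V(H)| over all nonempty subgraphs H.

    Vertices are 0..n-1; runs in time exponential in n.
    """
    best = Fraction(0)
    for size in range(1, n + 1):
        for subset in combinations(range(n), size):
            chosen = set(subset)
            m = sum(1 for u, v in edges if u in chosen and v in chosen)
            best = max(best, Fraction(m, size))
    return best


def satisfies_density_bound(n, edges, tau):
    """True if no subgraph has density more than tau."""
    return max_subgraph_density(n, edges) <= tau


triangle = [(0, 1), (1, 2), (0, 2)]
path3 = [(0, 1), (1, 2)]
k4 = list(combinations(range(4), 2))
```

For instance, a triangle has maximum density 1, so it violates the bound $τ = 2/3$, and deleting any one edge leaves a path with maximum density exactly 2/3.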

2601.03020

The regular expression matching problem asks whether a given regular expression of length $m$ matches a given string of length $n$. As is well known, the problem can be solved in $O(nm)$ time using Thompson's algorithm. Moreover, recent studies have shown that the matching problem for regular expressions extended with a practical extension called lookaround can be solved in the same time complexity. In this work, we consider three well-known extensions to regular expressions called backreference, intersection and complement, and we show that, unlike in the case of lookaround, the matching problem for regular expressions extended with any of the three (for backreference, even when restricted to one capturing group) cannot be solved in $O(n^{2-\varepsilon} \mathrm{poly}(m))$ time for any constant $\varepsilon > 0$ under the Orthogonal Vectors Conjecture. Moreover, we study the matching problem for regular expressions extended with complement in more detail, which is also known as extended regular expression (ERE) matching. We show that there is no ERE matching algorithm that runs in $O(n^{ω-\varepsilon} \mathrm{poly}(m))$ time ($2 \le ω< 2.3716$ is the exponent of square matrix multiplication) for any constant $\varepsilon > 0$ under the $k$-Clique Hypothesis, and there is no combinatorial ERE matching algorithm that runs in $O(n^{3-\varepsilon} \mathrm{poly}(m))$ time for any constant $\varepsilon > 0$ under the Combinatorial $k$-Clique Hypothesis. This shows that the $O(n^3 m)$-time algorithm introduced by Hopcroft and Ullman in 1979 and recently improved by Bille et al. to run in $O(n^ωm)$ time using fast matrix multiplication was already optimal in a sense, and sheds light on why the theoretical computer science community has struggled to improve the time complexity of ERE matching with respect to $n$ and $m$ for more than 45 years.

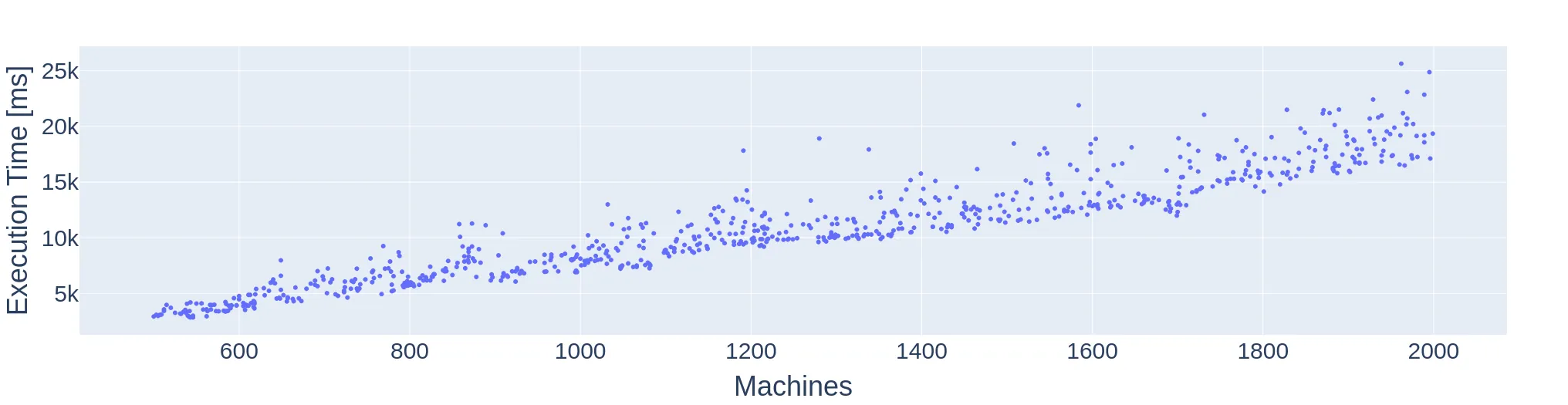

In moldable job scheduling, we are provided $m$ identical machines and $n$ jobs that can be executed on a variable number of machines. The execution time of each job depends on the number of machines assigned to execute that job. For the specific problem of monotone moldable job scheduling, jobs are assumed to have a processing time that is non-increasing in the number of machines. The previous best-known algorithms are: (1) a polynomial-time approximation scheme with time complexity $Ω(n^{g(1/\varepsilon)})$, where $g(\cdot)$ is a super-exponential function [Jansen and Thöle '08; Jansen and Land '18], (2) a fully polynomial approximation scheme for the case of $m \geq 8\frac{n}{\varepsilon}$ [Jansen and Land '18], and (3) a $\frac{3}{2}$ approximation with time complexity $O(nm\log(mn))$ [Wu, Zhang, and Chen '23]. We present a new practically efficient algorithm with an approximation ratio of $\approx (1.4593 + \varepsilon)$ and a time complexity of $O(nm \log \frac{1}{\varepsilon})$. Our result also applies to the contiguous variant of the problem. In addition to our theoretical results, we implement the presented algorithm and show that the practical performance is significantly better than the theoretical worst-case approximation ratio.

We study the parameterized complexity of the Cograph Deletion problem, which asks whether one can delete at most $k$ edges from a graph to make it $P_4$-free. This is a well-known graph modification problem with applications in computational biology and social network analysis. All current parameterized algorithms use a similar strategy, which is to find a $P_4$ and explore the local structure around it to perform an efficient recursive branching. The best known algorithm achieves running time $O^*(2.303^k)$ and requires an automated search of the branching cases due to their complexity. Since it appears difficult to further improve the current strategy, we devise a new approach using modular decompositions. We solve each module and the quotient graph independently, with the latter being the core problem. This reduces the problem to solving on a prime graph, in which all modules are trivial. We then use a characterization of Chudnovsky et al. stating that any large enough prime graph has one of seven structures as an induced subgraph. These all have many $P_4$s, with the quantity growing linearly with the graph size, and we show that these allow a recursive branch tree algorithm to achieve running time $O^*((2 + ε)^k)$ for any $ε> 0$. This appears to be the first algorithmic application of the prime graph characterization and it could be applicable to other modification problems. Towards this goal, we provide the exact set of graph classes $\mathcal{H}$ for which the $\mathcal{H}$-free editing problem can make use of our reduction to a prime graph, opening the door to improvements for other modification problems.

We introduce the first iterative algorithm for constructing an $\varepsilon$-coreset that guarantees deterministic $\ell_p$ subspace embedding for any $p \in [1,\infty)$ and any $\varepsilon > 0$. For a given full rank matrix $\mathbf{X} \in \mathbb{R}^{n \times d}$ where $n \gg d$, $\mathbf{X}' \in \mathbb{R}^{m \times d}$ is an $(\varepsilon,\ell_p)$-subspace embedding of $\mathbf{X}$ if, for every $\mathbf{q} \in \mathbb{R}^d$, $(1-\varepsilon)\|\mathbf{Xq}\|_{p}^{p} \leq \|\mathbf{X'q}\|_{p}^{p} \leq (1+\varepsilon)\|\mathbf{Xq}\|_{p}^{p}$. Specifically, in this paper, $\mathbf{X}'$ is a weighted subset of rows of $\mathbf{X}$, which is commonly known in the literature as a coreset. In every iteration, the algorithm ensures that the loss on the maintained set is upper and lower bounded by the loss on the original dataset with appropriate scalings. So, unlike typical coreset guarantees, due to bounded loss, our coreset gives a deterministic guarantee for the $\ell_p$ subspace embedding. For an error parameter $\varepsilon$, our algorithm takes $O(\mathrm{poly}(n,d,\varepsilon^{-1}))$ time and returns a deterministic $\varepsilon$-coreset for $\ell_p$ subspace embedding of size $O\left(\frac{d^{\max\{1,p/2\}}}{\varepsilon^{2}}\right)$. Here, we remove the $\log$ factors in the coreset size, which had been a long-standing open problem. Our coresets are optimal as they are tight with the lower bound. As an application, our coreset can also be used for approximately solving the $\ell_p$ regression problem in a deterministic manner.

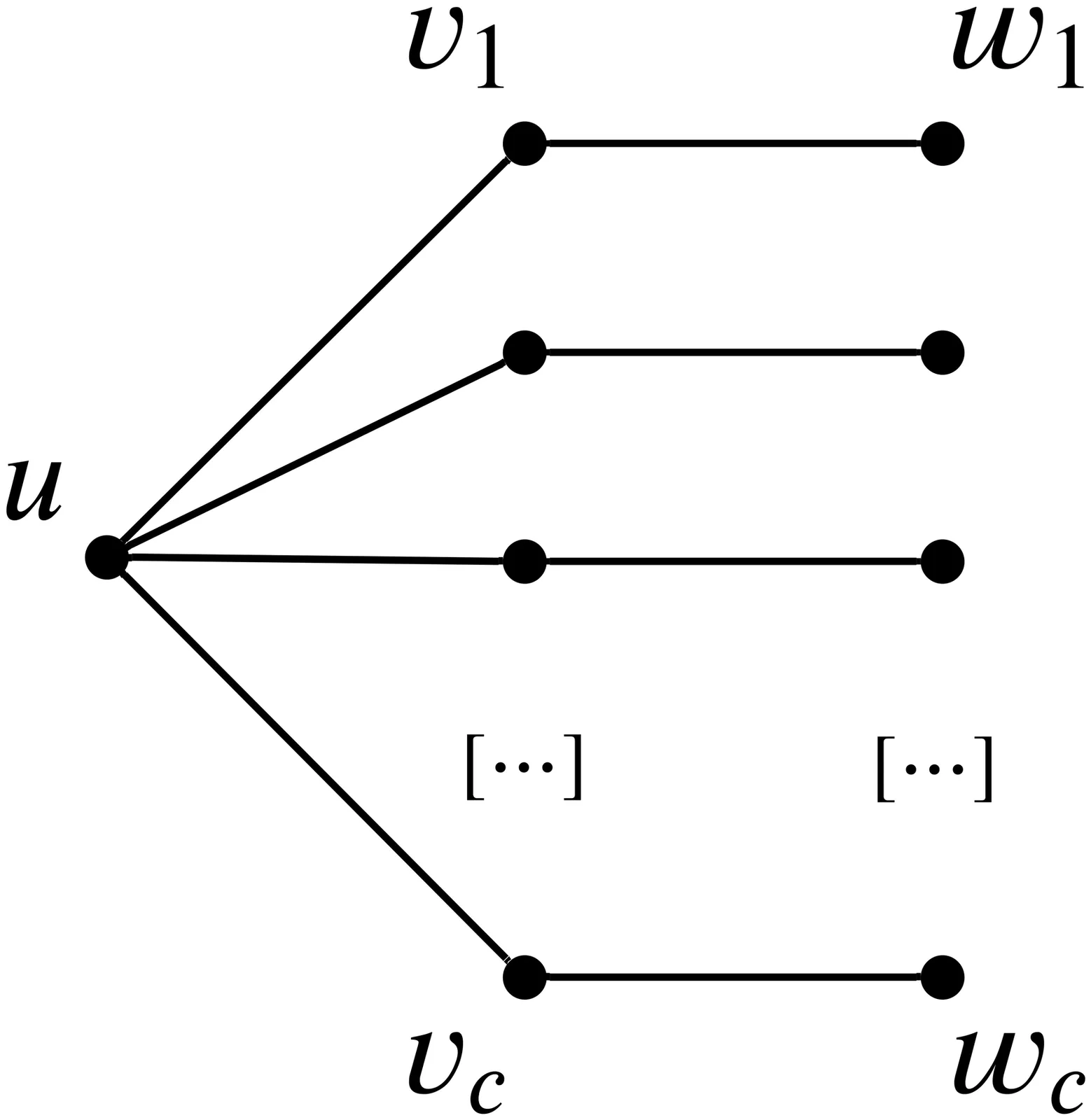

The kidney exchange mechanism allows many patient-donor pairs who are otherwise incompatible with each other to come together and exchange kidneys along a cycle. However, due to infrastructure and legal constraints, kidney exchange can only be performed in small cycles in practice. In reality, there are also some altruistic donors who do not have any paired patients. This allows us to also perform kidney exchange along paths that start from some altruistic donor. Unfortunately, the computational task is NP-complete. To overcome this computational barrier, an important line of research focuses on designing faster algorithms, both exact and using the framework of parameterized complexity. The standard parameter for the kidney exchange problem is the number $t$ of patients that receive a healthy kidney. The current fastest known deterministic FPT algorithm for this problem, parameterized by $t$, is $O^\star\left(14^t\right)$. In this work, we improve this by presenting a deterministic FPT algorithm that runs in time $O^\star\left((4e)^t\right)\approx O^\star\left(10.88^t\right)$. This problem is also known to be W[1]-hard parameterized by the treewidth of the underlying undirected graph. A natural question here is whether the kidney exchange problem admits an FPT algorithm parameterized by the pathwidth of the underlying undirected graph. We answer this negatively in this paper by proving that this problem is W[1]-hard parameterized by the pathwidth of the underlying undirected graph. We also present some parameterized intractability results improving the current understanding of the problem under the framework of parameterized complexity.

Subgraph complementation is an operation that toggles all adjacencies inside a selected vertex set. Given a graph \(G\) and a target class \(\mathcal{C}\), the Minimum Subgraph Complementation problem asks for a minimum-size vertex set \(S\) such that complementing the subgraph induced by \(S\) transforms \(G\) into a graph belonging to \(\mathcal{C}\). While the decision version of Subgraph Complementation has been extensively studied and is NP-complete for many graph classes, the algorithmic complexity of its optimization variant has remained largely unexplored. In this paper, we study MSC from an algorithmic perspective. We present polynomial-time algorithms for MSC in several nontrivial settings. Our results include polynomial-time solvability for transforming graphs between bipartite, co-bipartite, and split graphs, as well as for complementing bipartite regular graphs into chordal graphs. We also show that MSC to the class of graphs of fixed degeneracy can be solved in polynomial time when the input graph is a forest. Moreover, we investigate MSC with respect to connectivity and prove that MSC to the class of disconnected graphs and to the class of 2-connected graphs can be solved in polynomial time for arbitrary inputs.
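The operation itself is simple to state in code. The sketch below toggles every adjacency inside a chosen vertex set `S` on an adjacency-set representation; it illustrates the operation only, not the polynomial-time algorithms from the abstract, and the function name is an assumption.

```python
from itertools import combinations


def complement_subgraph(adj, S):
    """Return a new graph in which all adjacencies inside S are toggled.

    adj maps each vertex to the set of its neighbours (undirected, simple).
    Pairs with at least one endpoint outside S are left unchanged.
    """
    result = {v: set(nbrs) for v, nbrs in adj.items()}
    for u, v in combinations(sorted(set(S)), 2):
        if v in result[u]:
            result[u].discard(v)
            result[v].discard(u)
        else:
            result[u].add(v)
            result[v].add(u)
    return result


# Path 0 - 1 - 2 with a pendant vertex 3 attached to 2.
graph = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
flipped = complement_subgraph(graph, {0, 1, 2})
```

Complementing `{0, 1, 2}` removes the edges 0-1 and 1-2, adds the edge 0-2, and keeps 2-3; applying the operation twice with the same set restores the original graph.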

Recently, Lafond and Luo [MFCS 2023] defined the $\mathcal{G}$-modular cardinality of a graph $G$ as the minimum size of a partition of $V(G)$ into modules that belong to a graph class $\mathcal{G}$. We analyze the complexity of calculating parameters that generalize interval graphs when parameterized by the $\mathcal{G}$-modular cardinality, where $\mathcal{G}$ corresponds either to the class of interval graphs or to the union of complete graphs. Namely, we analyze the complexity of computing the thinness and the simultaneous interval number of a graph. We present a linear kernel for the Thinness problem parameterized by the interval-modular cardinality and an FPT algorithm for Simultaneous Interval Number when parameterized by the cluster-modular cardinality plus the solution size. The interval-modular cardinality of a graph is not greater than the cluster-modular cardinality, which in turn generalizes the neighborhood diversity and the twin-cover number. Thus, our results imply a linear kernel for Thinness when parameterized by the neighborhood diversity of the input graph, FPT algorithms for Thinness when parameterized by the twin-cover number and vertex cover number, and FPT algorithms for Simultaneous Interval Number when parameterized by the neighborhood diversity plus the solution size, twin-cover number, and vertex cover number. To the best of our knowledge, prior to our work no parameterized algorithms (FPT or XP) for computing the thinness or the simultaneous interval number were known. On the negative side, we observe that Thinness and Simultaneous Interval Number parameterized by treewidth, pathwidth, bandwidth, (linear) mim-width, clique-width, modular-width, or even the thinness or simultaneous interval number themselves, admit no polynomial kernels assuming NP $\not\subseteq$ coNP/poly.

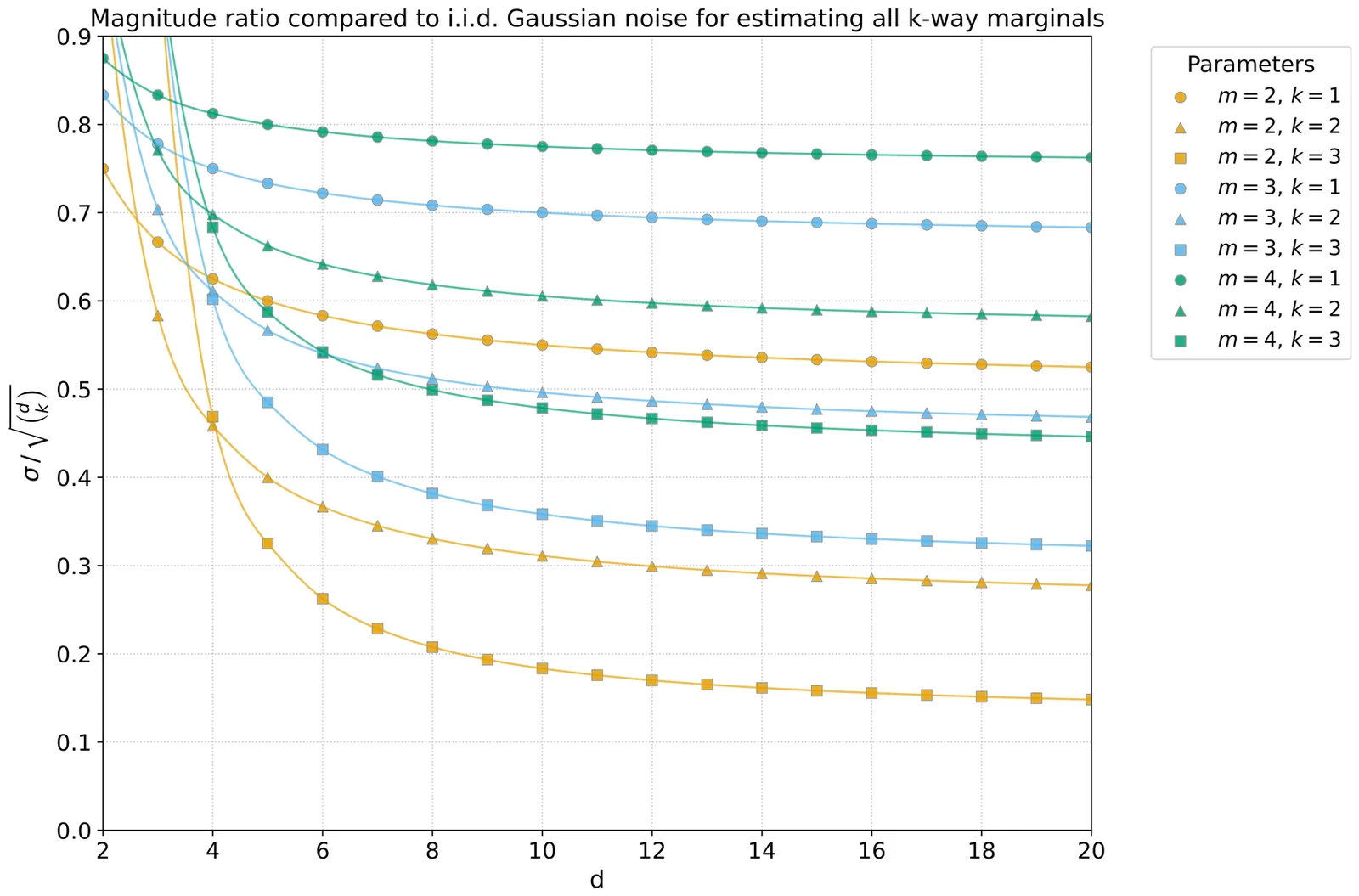

We revisit the task of releasing marginal queries under differential privacy with additive (correlated) Gaussian noise. We first give a construction for answering arbitrary workloads of weighted marginal queries, over arbitrary domains. Our technique is based on releasing queries in the Fourier basis with independent noise with carefully calibrated variances, and reconstructing the marginal query answers using the inverse Fourier transform. We show that our algorithm, which is a factorization mechanism, is exactly optimal among all factorization mechanisms, both for minimizing the sum of weighted noise variances, and for minimizing the maximum noise variance. Unlike algorithms based on optimizing over all factorization mechanisms via semidefinite programming, our mechanism runs in time polynomial in the dataset and the output size. This construction recovers results of Xiao et al. [Neurips 2023] with a simpler algorithm and optimality proof, and a better running time. We then extend our approach to a generalization of marginals which we refer to as product queries. We show that our algorithm is still exactly optimal for this more general class of queries. Finally, we show how to embed extended marginal queries, which allow using a threshold predicate on numerical attributes, into product queries. We show that our mechanism is almost optimal among all factorization mechanisms for extended marginals, in the sense that it achieves the optimal (maximum or average) noise variance up to lower order terms.