High Energy Physics - Experiment

Results from current and past experiments at particle accelerators, detector physics.

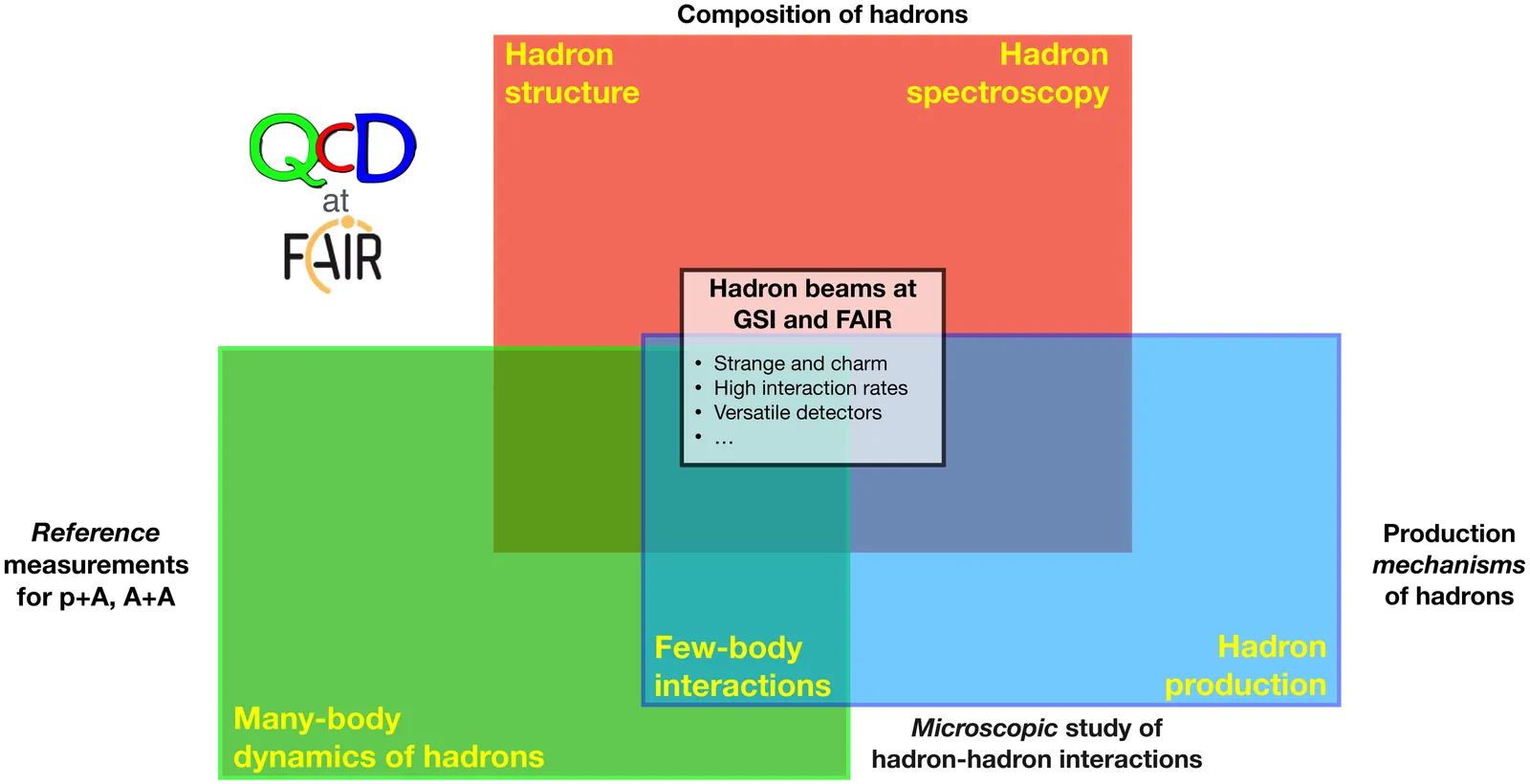

This White Paper outlines a coordinated, decade-spanning programme of hadron and QCD studies anchored at the GSI/FAIR accelerator complex. Profiting from intense deuteron, proton and pion beams, high-rate-capable detectors and an international theory effort, the initiative addresses fundamental questions about the strong interaction, in particular confinement and dynamical mass generation. These include understanding hadron-hadron interactions and the composition of hadrons by mapping the baryon and meson spectra, including exotic states, and by quantifying hadron structure. This interdisciplinary research connects nuclear, heavy-ion, and astroparticle physics with nuclear astrophysics, linking, for example, terrestrial data to constraints on neutron star structure. A phased roadmap, with the start-up of the SIS100 accelerator and envisaged detector upgrades, will yield precision cross sections, transition form factors, in-medium spectral functions, and validated theory inputs. Synergies with programmes at other accelerator facilities worldwide are anticipated. The programme is expected to deliver decisive advances in our understanding of non-perturbative (strong) QCD and astrophysics, as well as in high-rate detector and data-science technology.

2512.15230

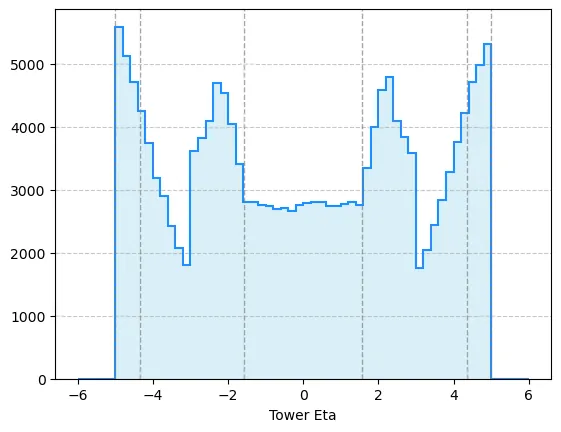

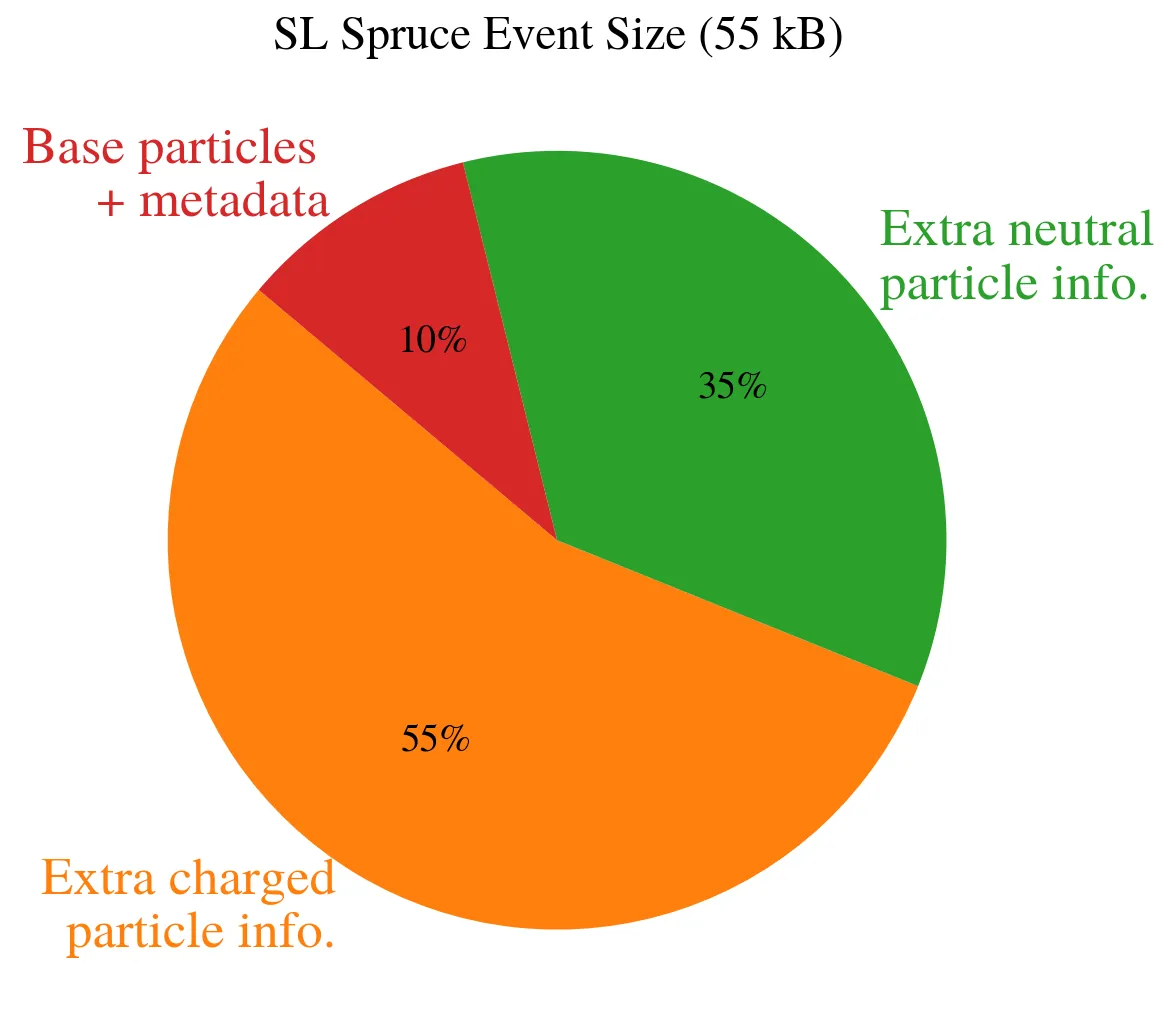

We introduce ColliderML, a large, open, experiment-agnostic dataset of fully simulated and digitised proton-proton collisions in High-Luminosity Large Hadron Collider conditions ($\sqrt{s}=14$ TeV, mean pile-up $μ= 200$). ColliderML provides one million events across ten Standard Model and Beyond Standard Model processes, plus extensive single-particle samples. All samples are produced with modern next-to-leading-order matrix-element calculation and showering, realistic per-event pile-up overlay, a validated OpenDataDetector geometry, and standard reconstructions. The release is hosted on the ML-friendly Hugging Face platform and fills a major gap for machine learning (ML) research on detector-level data. We present the physics coverage and the generation, simulation, digitisation and reconstruction pipeline, describe the data format and access, and report initial collider physics benchmarks.

Multiple proton-proton collisions (pile-up) occur at every bunch crossing at the LHC, with the mean number of interactions expected to reach 80 during Run 3 and up to 200 at the High-Luminosity LHC. As a direct consequence, events with multijet signatures will occur at increasingly high rates. To cope with the increased luminosity, it is crucial to group jets efficiently according to their origin along the beamline, particularly at the trigger level. In this work, we introduce DIPz, a novel uncertainty-aware jet regression model based on a Deep Sets architecture, which regresses the origin position of each jet along the beamline. The inputs to the DIPz algorithm are the charged-particle tracks associated with each jet. An event-level discriminant, the Maximum Log Product of Likelihoods (MLPL), is constructed by combining the per-jet DIPz predictions. A cut on MLPL is then optimized to select events compatible with the targeted multijet signatures. This combined approach provides a robust and computationally efficient method for pile-up rejection in multijet final states, applicable to real-time event selection in the ATLAS High Level Trigger.
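The per-jet predictions can be combined into an event-level score roughly as follows. This is a minimal sketch that assumes each DIPz output is a Gaussian likelihood in $z$ with a predicted mean `mu` and width `sigma`. The Gaussian form, the function names `gaussian_loglik` and `mlpl`, and the toy jet values are illustrative assumptions, not the published implementation.

```python
import math


def gaussian_loglik(z: float, mu: float, sigma: float) -> float:
    """Log of the normal density N(z; mu, sigma^2)."""
    return -0.5 * ((z - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2.0 * math.pi))


def mlpl(jets: list[tuple[float, float]]) -> tuple[float, float]:
    """Maximum over z of the summed per-jet log-likelihoods (hypothetical Gaussian form).

    Each jet is (mu, sigma): a regressed origin z along the beamline and its
    predicted uncertainty, in the same units (e.g. mm). For Gaussian likelihoods
    the product is maximised at the inverse-variance-weighted mean of the mu
    values, so no numerical scan over z is needed.
    """
    weights = [1.0 / sigma**2 for _, sigma in jets]
    z_best = sum(w * mu for w, (mu, _) in zip(weights, jets)) / sum(weights)
    log_product = sum(gaussian_loglik(z_best, mu, sigma) for mu, sigma in jets)
    return log_product, z_best


# Toy inputs: four jets from one vertex versus two jet pairs from two vertices.
same_vertex = [(10.0, 0.5), (10.2, 0.5), (9.9, 0.5), (10.1, 0.5)]
two_vertices = [(10.0, 0.5), (10.2, 0.5), (-30.0, 0.5), (-29.8, 0.5)]
print(mlpl(same_vertex))
print(mlpl(two_vertices))
```

Events whose jets share a common origin get a much higher MLPL than events that mix jets from separate pile-up vertices. A trigger would keep events above an optimized threshold, whose value is not given here.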

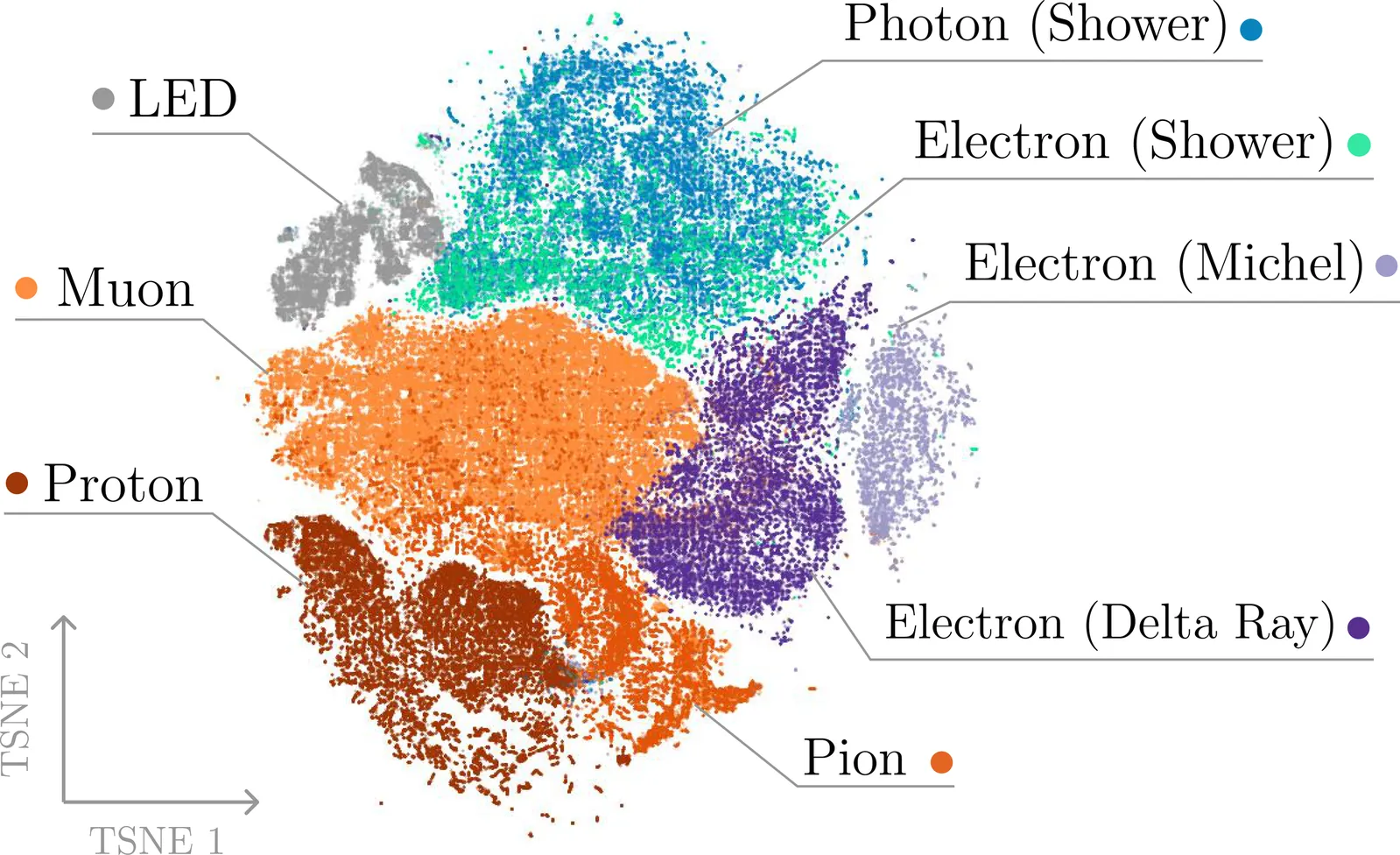

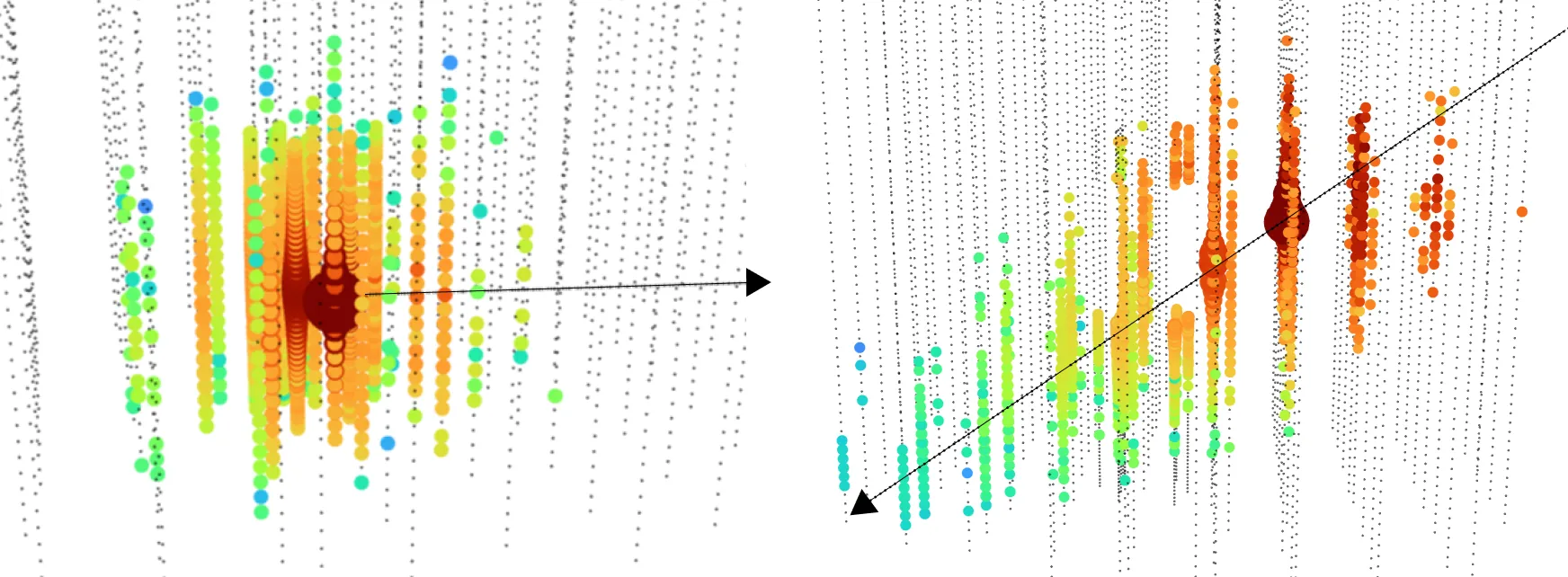

Liquid argon time projection chambers (LArTPCs) provide dense, high-fidelity 3D measurements of particle interactions and underpin current and future neutrino and rare-event experiments. Physics reconstruction typically relies on one of two approaches. The first is a complex detector-specific pipeline built from tens of hand-engineered pattern-recognition algorithms. The second is a cascade of task-specific neural networks trained on extensive labeled simulation, which in turn requires a careful, time-consuming calibration process. We introduce **Panda**, a model that learns reusable sensor-level representations directly from raw, unlabeled LArTPC data. Panda couples a hierarchical sparse 3D encoder with a multi-view, prototype-based self-distillation objective. On a simulated dataset, Panda substantially improves label efficiency and reconstruction quality, beating the previous state-of-the-art (SOTA) semantic segmentation model with 1,000$\times$ fewer labels. We also show that a single set-prediction head, 1/20th the size of the backbone and with no physical priors, trained on frozen Panda outputs yields particle identification comparable to SOTA reconstruction tools. Full fine-tuning further improves performance across all tasks.
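The abstract does not spell out the self-distillation loss. As an illustration only, the sketch below shows a generic DINO-style, prototype-based loss across views. Teacher logits over $K$ prototypes are centred and sharpened, and the student is trained to match them on *other* views of the same event. The temperatures, centring scheme and function names are assumptions. The exponential-moving-average teacher and the encoder that produces the logits are not shown.

```python
import math


def log_softmax(logits, temperature):
    """Numerically stable log of a temperature-scaled softmax."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_norm = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_norm for x in scaled]


def self_distillation_loss(student_views, teacher_views, center,
                           t_student=0.1, t_teacher=0.04):
    """DINO-style cross-entropy between teacher and student prototype assignments.

    student_views, teacher_views: per-view lists of logits over K prototypes.
    center: running mean of teacher logits; subtracting it (together with a low
    teacher temperature for sharpening) discourages collapse onto one prototype.
    Only pairs of different views contribute, so at least two views are needed.
    """
    loss, n_pairs = 0.0, 0
    for i, t_logits in enumerate(teacher_views):
        centred = [t - c for t, c in zip(t_logits, center)]
        p_teacher = [math.exp(v) for v in log_softmax(centred, t_teacher)]
        for j, s_logits in enumerate(student_views):
            if i == j:
                continue
            log_p_student = log_softmax(s_logits, t_student)
            loss -= sum(p * lp for p, lp in zip(p_teacher, log_p_student))
            n_pairs += 1
    if n_pairs == 0:
        raise ValueError("need at least two views")
    return loss / n_pairs


# Toy example with K = 3 prototypes and two views.
center = [0.0, 0.0, 0.0]
teacher = [[5.0, 0.0, 0.0], [5.0, 0.0, 0.0]]
aligned = self_distillation_loss([[5.0, 0.0, 0.0], [4.0, 0.0, 0.0]], teacher, center)
misaligned = self_distillation_loss([[0.0, 5.0, 0.0], [0.0, 4.0, 0.0]], teacher, center)
print(aligned, misaligned)
```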

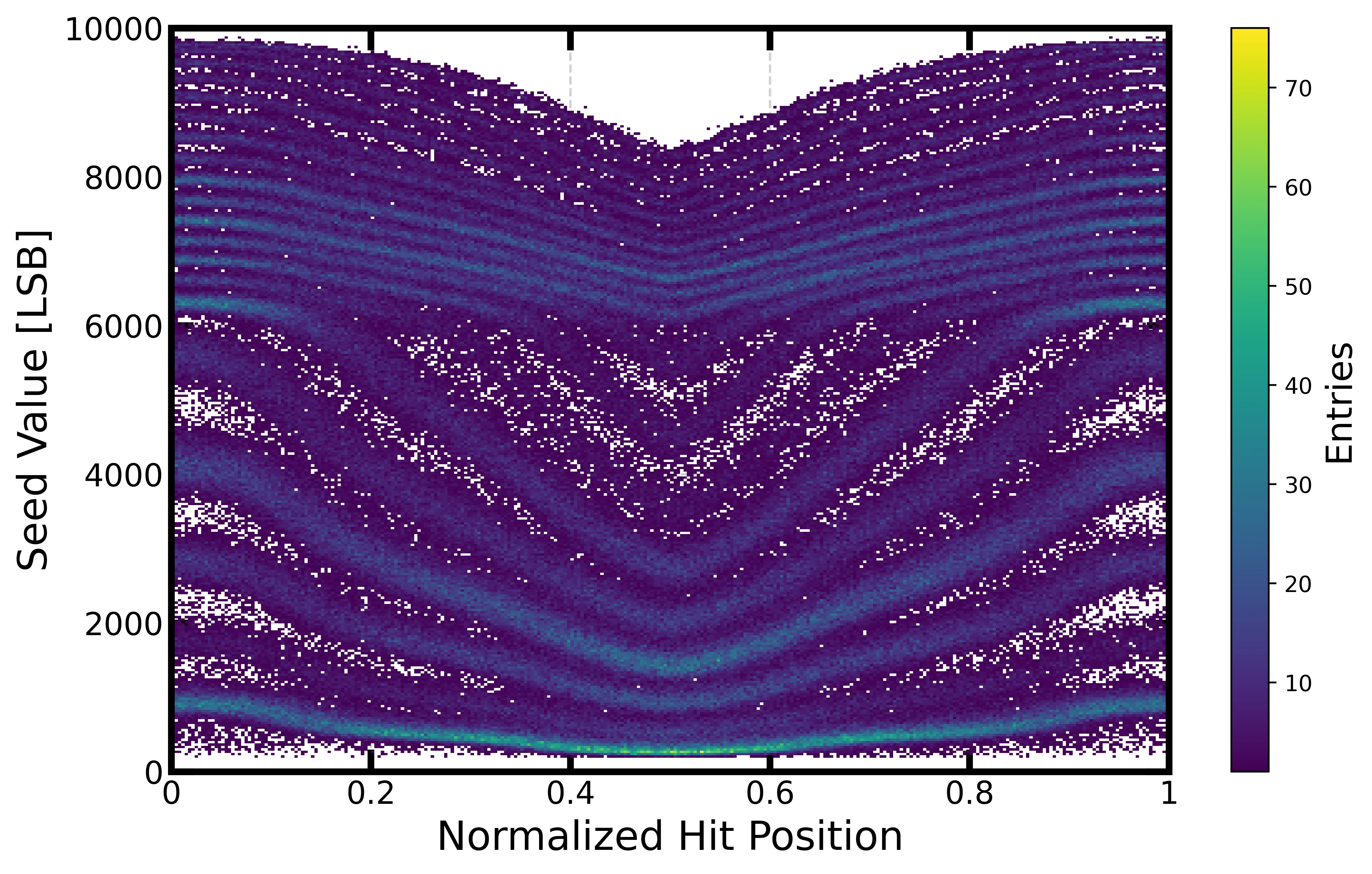

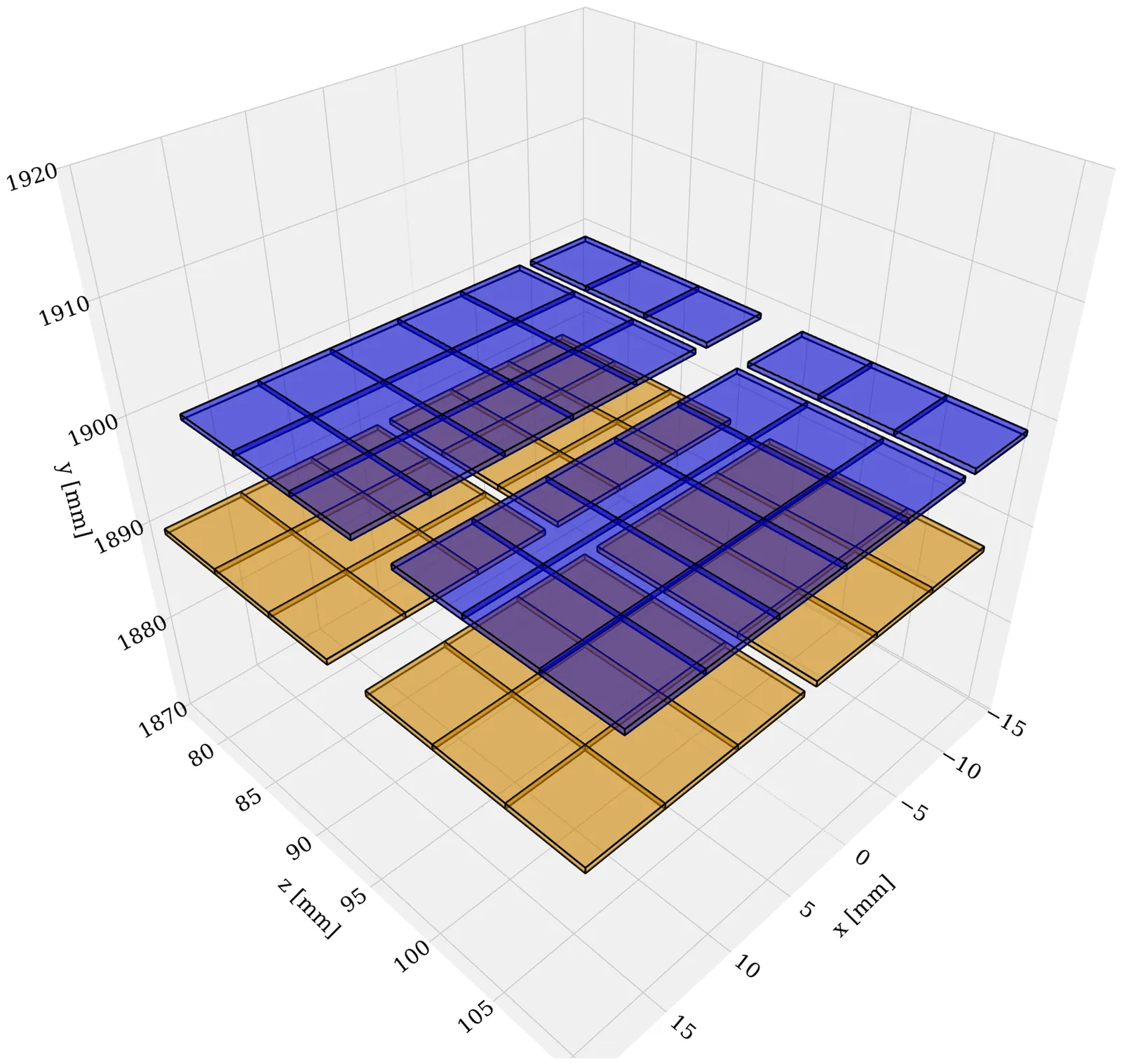

Unsupervised learning has been widely applied to various tasks in particle physics. However, existing models lack precise control over their learned representations, limiting physical interpretability and hindering their use for accurate measurements. We propose the Histogram AutoEncoder (HistoAE), an unsupervised representation learning network featuring a custom histogram-based loss that enforces a physically structured latent space. Applied to silicon microstrip detectors, HistoAE learns an interpretable two-dimensional latent space corresponding to the particle's charge and impact position. After simple post-processing, it achieves a charge resolution of $0.25\,e$ and a position resolution of $3\,μ\mathrm{m}$ on beam-test data, comparable to those of the conventional approach. These results demonstrate that unsupervised deep learning models can enable physically meaningful and quantitatively precise measurements. Moreover, the generative capacity of HistoAE allows a straightforward extension to fast detector simulation.
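The exact form of the HistoAE loss is not given here. One common way to make a histogram usable in a loss is a "soft" histogram, in which each latent value spreads unit weight over nearby bins with a Gaussian kernel. This keeps the result smooth in the latent values, so an autodiff framework can differentiate it. The sketch below compares such a histogram with a target distribution. The kernel, bandwidth, squared-distance penalty and target are all hypothetical choices.

```python
import math


def soft_histogram(values, bin_centers, bandwidth):
    """Smooth histogram: each value spreads unit weight over bins via a Gaussian kernel."""
    hist = [0.0] * len(bin_centers)
    for v in values:
        logits = [-0.5 * ((v - c) / bandwidth) ** 2 for c in bin_centers]
        top = max(logits)  # stabilise exp() for values far from every bin
        weights = [math.exp(a - top) for a in logits]
        norm = sum(weights)
        for k, w in enumerate(weights):
            hist[k] += w / norm
    return [h / len(values) for h in hist]  # normalise to unit total


def histogram_loss(latent, target_hist, bin_centers, bandwidth=0.5):
    """Squared distance between the latent soft histogram and a target histogram."""
    hist = soft_histogram(latent, bin_centers, bandwidth)
    return sum((h - t) ** 2 for h, t in zip(hist, target_hist))


# Hypothetical target: three equally populated charge states at latent values 1, 2, 3.
centers = [1.0, 2.0, 3.0]
target = [1 / 3, 1 / 3, 1 / 3]
print(histogram_loss([1.0, 2.0, 3.0, 1.0, 2.0, 3.0], target, centers))  # matches target
print(histogram_loss([1.0] * 6, target, centers))                        # all in one bin
```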

The KM3NeT/ORCA neutrino telescope, still under construction, has not yet reached its full potential for neutrino reconstruction. A deep learning model is normally trained without explicit information about the physics or the detector, so both remain unknown to the model. This study leverages transformers by incorporating attention masks inspired by the physics and the detector design, allowing the model to account for both the telescope layout and the neutrino physics it measures. The study also shows that transformers retain valuable information across detectors when fine-tuned from one detector configuration to another.
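The masks used in the study are not specified here. One hypothetical example of a physics-inspired mask is a causality mask: a hit may attend only to hits that Cherenkov light could have connected, given their separation in space and time. The sketch below builds such a mask and applies it in plain scaled dot-product attention. The refractive index, time tolerance and names are assumptions.

```python
import math

C_VACUUM = 0.299792458  # speed of light in vacuum, m/ns
N_GROUP = 1.38          # assumed group refractive index of sea water


def causality_mask(positions, times, tolerance_ns=20.0):
    """mask[i][j] is True if light could connect hits i and j (within a tolerance)."""
    v_light = C_VACUUM / N_GROUP
    n = len(times)
    return [[abs(times[i] - times[j])
             <= math.dist(positions[i], positions[j]) / v_light + tolerance_ns
             for j in range(n)] for i in range(n)]


def masked_attention(queries, keys, values, mask):
    """Scaled dot-product attention in which masked-out pairs receive zero weight."""
    d = len(queries[0])
    out = []
    for i, q in enumerate(queries):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) if mask[i][j] else -math.inf
                  for j, k in enumerate(keys)]
        top = max(scores)  # finite: a hit is always causally compatible with itself
        weights = [math.exp(s - top) for s in scores]  # exp(-inf) == 0.0
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, values)) / total
                    for c in range(len(values[0]))])
    return out


positions = [(0.0, 0.0, 0.0), (0.0, 0.0, 10.0), (0.0, 0.0, 0.0)]  # metres
times = [0.0, 50.0, 1000.0]  # ns; the third hit is far too late to be related
mask = causality_mask(positions, times)
out = masked_attention([[1.0], [0.5], [2.0]], [[1.0], [0.5], [2.0]],
                       [[1.0], [1.0], [100.0]], mask)
print(out)
```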

Data Quality Monitoring (DQM) is a crucial component of particle physics experiments: it ensures that the recorded data is of the highest quality and suitable for subsequent physics analysis. Due to the extreme environmental conditions, unprecedented data volumes, and the sheer scale and complexity of the detectors, DQM orchestration has become a very challenging task. The use of Machine Learning (ML) to automate anomaly detection, improve efficiency, and reduce human error in collecting high-quality data is therefore unavoidable. Since DQM relies on real experimental data, it is inherently tied to the specific detector substructure and technology in operation. In this work, a simulation-driven approach to DQM is proposed, enabling the study and development of data-quality methodologies in a controlled environment. Using a modified version of Delphes, a fast, multi-purpose detector simulation, we demonstrate a preliminary framework that leverages ML to identify detector anomalies and to localize the malfunctioning components responsible. We introduce MEDIC (Monitoring for Event Data Integrity and Consistency), a neural network designed to learn detector behavior and perform DQM tasks by looking for potential faults. The present implementation adopts a simplified setup for computational ease, in which large detector regions are deliberately deactivated to mimic faults. Even so, this work represents an initial step toward a comprehensive ML-based DQM framework. The encouraging results underline the potential of simulation-driven studies as a foundation for developing more advanced, data-driven DQM systems for future particle detectors.
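The fault-injection idea (deactivating detector regions in simulation and then localizing them) can be illustrated with a toy $(\eta, \phi)$ occupancy map. Here a trivial occupancy-ratio check stands in for MEDIC, which the text describes as a neural network. The map size, threshold and function names are hypothetical, and statistical fluctuations are ignored.

```python
def deactivate_region(occupancy, eta_bins, phi_bins):
    """Return a copy of an (eta, phi) occupancy map with a block of regions switched off."""
    return [[0.0 if (i in eta_bins and j in phi_bins) else x for j, x in enumerate(row)]
            for i, row in enumerate(occupancy)]


def localize_faults(reference, monitored, threshold=0.5):
    """Flag (eta_bin, phi_bin) regions whose occupancy fell below threshold * reference."""
    return [(i, j)
            for i, (ref_row, mon_row) in enumerate(zip(reference, monitored))
            for j, (ref, mon) in enumerate(zip(ref_row, mon_row))
            if ref > 0 and mon < threshold * ref]


reference = [[100.0] * 4 for _ in range(4)]  # toy 4 x 4 occupancy map
faulty = deactivate_region(reference, range(1, 3), range(0, 2))
print(localize_faults(reference, faulty))
```

Because the simulation knows which regions were switched off, each injected fault comes with a ground-truth label. This is what makes a controlled, simulation-driven setting useful for training and validating a learned monitor.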

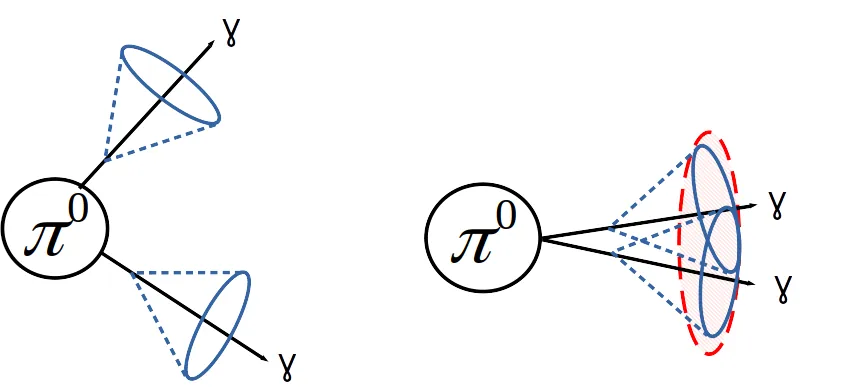

The physics programs of current and future collider experiments necessitate the development of surrogate simulators for calorimeter showers. While much progress has been made in developing generative models for this task, they have typically been evaluated in simplified scenarios and for single particles. This is particularly true for the challenging task of highly granular calorimeter simulation. This work studies, for the first time, the use of highly granular generative calorimeter surrogates in a realistic simulation application. We introduce DDML, a generic library that enables the combination of generative calorimeter surrogates with realistic detectors implemented using the DD4hep toolkit. We compare two generative models: one operating on a regular grid representation, and the other using a less common point cloud approach. To disentangle methodological details from model performance, we provide comparisons to idealized simulators that directly sample representations of different resolutions from the full-simulation ground truth. We then systematically evaluate model performance on post-reconstruction benchmarks for electromagnetic shower simulation. We begin with a typical single-particle study, then introduce a first multi-particle benchmark based on di-photon separations, and finally study a first full-physics benchmark based on hadronic decays of the tau lepton. Our results indicate that, for highly granular calorimeter simulation, models operating on a point cloud can achieve a more favorable balance between speed and accuracy than those operating on a regular grid representation.
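The difference between the two representations, and what an "idealized" lower-resolution simulator discards, can be seen by binning a point cloud of energy deposits onto a regular grid. The sketch below assumes hits given as `(x, y, z, energy)` tuples and a cubic cell size. Both the input format and the function name `voxelize` are hypothetical.

```python
import math
from collections import defaultdict


def voxelize(hits, cell_size):
    """Sum hit energies into cubic cells; returns {(ix, iy, iz): energy}."""
    grid = defaultdict(float)
    for x, y, z, energy in hits:
        key = (math.floor(x / cell_size), math.floor(y / cell_size), math.floor(z / cell_size))
        grid[key] += energy
    return dict(grid)


# Toy point cloud (positions in cm, energies in GeV).
hits = [(0.1, 0.1, 0.1, 1.0), (0.4, 0.2, 0.3, 2.0), (1.2, 0.1, 0.1, 0.5)]
for cell in (0.25, 1.0):
    cells = voxelize(hits, cell)
    print(cell, len(cells), sum(cells.values()))
```

Total energy is conserved at every resolution. Coarser cells, however, merge nearby deposits and lose the sub-cell structure that a point-cloud model can represent directly.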

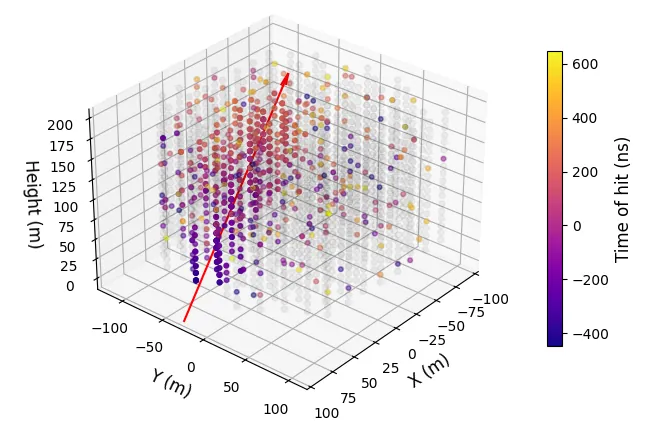

Neutrino telescopes are large-scale detectors designed to observe the Cherenkov radiation produced by neutrino interactions in water or ice. They are built to identify extraterrestrial neutrino sources and to probe fundamental questions about the elusive neutrino itself. A central challenge common to neutrino telescopes is solving a series of inverse problems known as event reconstruction, which seeks to infer the properties of the incident neutrino from the detected Cherenkov light. Recently, significant efforts have been made to adapt advances from deep learning research to event reconstruction, as such techniques provide several benefits over traditional methods. Reconstruction needs and low-level data are largely similar across experiments, yet cross-experimental collaboration has been hindered by a lack of diverse open-source datasets for comparing methods. We present NuBench, an open benchmark for deep learning-based event reconstruction in neutrino telescopes. NuBench comprises seven large-scale simulated datasets containing nearly 130 million charged- and neutral-current muon-neutrino interactions spanning 10 GeV to 100 TeV, generated across six detector geometries inspired by existing and proposed experiments. These datasets provide pulse- and event-level information suitable for developing and comparing machine-learning reconstruction methods in both water and ice environments. Using NuBench, we evaluate four reconstruction algorithms on up to five core tasks. The algorithms are ParticleNeT and DynEdge, actively used within the KM3NeT and IceCube collaborations, respectively, along with GRIT and DeepIce. The tasks are energy and direction reconstruction, topology classification, interaction vertex prediction, and inelasticity estimation.
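Direction reconstruction is commonly scored by the opening angle between the true and reconstructed directions, summarized by its median over events. The sketch below assumes directions given as (zenith, azimuth) pairs in radians. The NuBench metric definitions may differ, and the toy events are made up for illustration.

```python
import math
import statistics


def unit_vector(zenith, azimuth):
    """Cartesian unit vector for zenith and azimuth angles in radians."""
    return (math.sin(zenith) * math.cos(azimuth),
            math.sin(zenith) * math.sin(azimuth),
            math.cos(zenith))


def opening_angle(true_dir, reco_dir):
    """Angle in radians between two (zenith, azimuth) directions."""
    a, b = unit_vector(*true_dir), unit_vector(*reco_dir)
    dot = sum(x * y for x, y in zip(a, b))
    return math.acos(max(-1.0, min(1.0, dot)))  # clamp rounding errors outside [-1, 1]


# Hypothetical (true, reconstructed) direction pairs.
events = [((0.5, 1.0), (0.52, 1.01)), ((1.2, 3.0), (1.1, 2.9)), ((2.0, -1.0), (2.05, -0.98))]
errors_deg = [math.degrees(opening_angle(t, r)) for t, r in events]
print(statistics.median(errors_deg))
```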

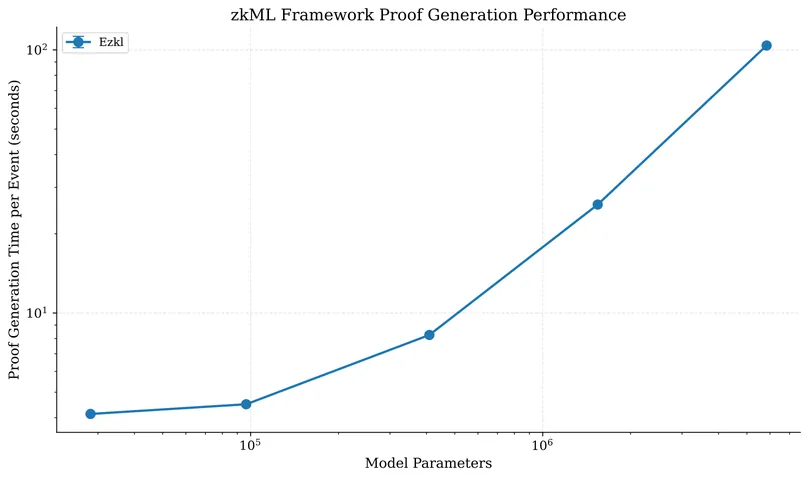

Low-latency event-selection (trigger) algorithms are essential components of Large Hadron Collider (LHC) operation. Modern machine learning (ML) models have shown strong offline performance as classifiers and could improve trigger performance, thereby benefiting downstream physics analyses. However, inference with such large models cannot meet the online latency constraints imposed by the $40\,\text{MHz}$ bunch-crossing rate at the LHC. In this work, we propose `PHAZE`, a novel framework built on cryptographic techniques such as hashing and zero-knowledge machine learning (zkML). It achieves low-latency inference via a certifiable early-exit mechanism from an arbitrarily large baseline model. We lay the foundations for such a framework to achieve nanosecond-order latency. We also discuss its inherent advantages for LHC triggers, such as built-in anomaly detection, and its potential to enable a dynamic low-level trigger in the future.
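The early-exit idea can be illustrated with a toy hash table. Trigger features are quantised and hashed, and if the key has been seen before, the cached decision is returned without running the large model. This is a simplified illustration, not the PHAZE design. The quantisation, the SHA-256 key and the class name are assumptions, and the zero-knowledge certificate that PHAZE attaches to an early exit is not modelled.

```python
import hashlib
import struct


def feature_key(features, precision=2):
    """Hash of quantised trigger features; quantisation lets nearby inputs share a key."""
    quantised = tuple(round(x, precision) for x in features)
    packed = struct.pack(f"{len(quantised)}d", *quantised)
    return hashlib.sha256(packed).hexdigest()


class EarlyExitTrigger:
    """Toy early-exit wrapper: cached decisions are returned without running the model."""

    def __init__(self, model):
        self.model = model   # expensive baseline classifier (any callable)
        self.table = {}      # hash key -> cached decision
        self.model_calls = 0

    def decide(self, features):
        key = feature_key(features)
        if key in self.table:  # early exit: a lookup only
            return self.table[key]
        self.model_calls += 1
        decision = self.model(features)
        self.table[key] = decision
        return decision


trigger = EarlyExitTrigger(lambda f: sum(f) > 1.0)
print(trigger.decide([0.6, 0.6]), trigger.decide([0.601, 0.6]), trigger.model_calls)
```

In a real trigger, the table would be filled offline and implemented in fast hardware. The dictionary here only stands in for that lookup.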

Run 3 of the LHC brings unprecedented luminosity and a surge in data volume to the LHCb detector, necessitating a critical reduction in the size of each reconstructed event without compromising the physics reach of the heavy-flavour programme. While signal decays typically involve just a few charged particles, a single proton-proton collision produces hundreds of tracks, and charged-particle information dominates the event size. To address this imbalance, a suite of inclusive isolation tools has been developed, including both classical methods and a novel Inclusive Multivariate Isolation (IMI) algorithm. The IMI unifies the key strengths of classical isolation techniques and is designed to handle diverse decay topologies and kinematics robustly, enabling efficient reconstruction of decay chains with varying final-state multiplicities. It consistently outperforms traditional methods, with superior background rejection and high signal efficiency across diverse channels and event multiplicities. By retaining only the most relevant particles in each event, the method achieves a 45% reduction in data size while preserving full physics performance, selecting signal particles with 99% efficiency. We also validate IMI on Run 3 data, confirming its robustness under real data-taking conditions. In the long term, IMI could provide a fast, lightweight front-end to support more compute-intensive selection strategies in the high-multiplicity environment of the High-Luminosity LHC.
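The classical baseline that IMI builds on can be sketched as cone isolation. Sum the transverse momenta of tracks within $\Delta R$ of a signal candidate, and persist only those tracks. In the sketch below, the `(pt, eta, phi)` tuple format and the cone radius of 0.5 are hypothetical choices. The multivariate IMI selection itself is not reproduced.

```python
import math


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance in (eta, phi), with the phi difference wrapped to [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def cone_isolation(candidate, tracks, cone=0.5):
    """Return (scalar pT sum in the cone, tracks in the cone to keep for persistence).

    candidate and tracks are (pt, eta, phi) tuples; the cone radius is a hypothetical choice.
    """
    _, c_eta, c_phi = candidate
    in_cone = [t for t in tracks if delta_r(c_eta, c_phi, t[1], t[2]) < cone]
    return sum(t[0] for t in in_cone), in_cone


candidate = (5.0, 0.0, 3.1)
tracks = [(1.0, 0.1, -3.1), (2.0, 2.0, 0.0), (0.5, -0.2, 3.0)]
iso, kept = cone_isolation(candidate, tracks)
print(iso, len(kept), len(tracks))
```

The first track lies just across the $\phi = \pm\pi$ boundary from the candidate, which is why the $\phi$ difference must be wrapped.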

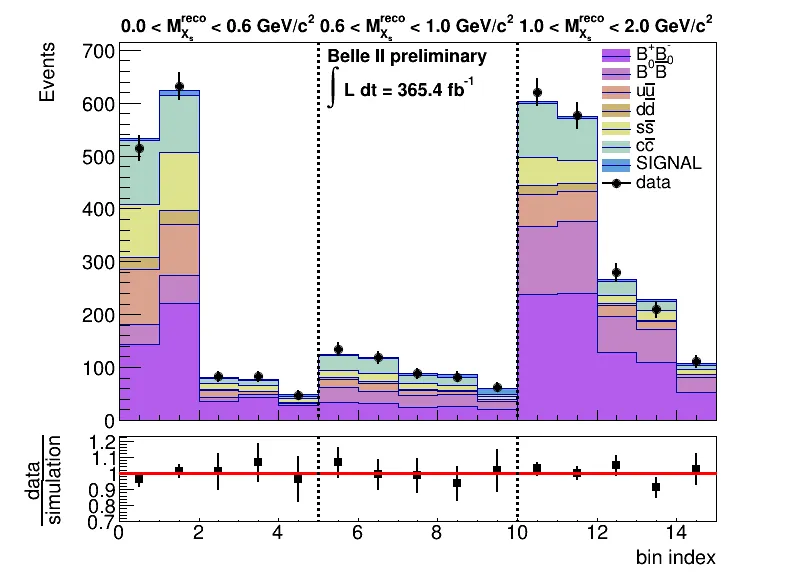

We report the first search for the flavor-changing neutral-current decays $B \rightarrow X_{s} ν\barν$, where $X_{s}$ is a hadronic system with strangeness equal to 1, in data collected with the Belle II detector at the SuperKEKB asymmetric-energy $e^+e^-$ collider. The data sample corresponds to an integrated luminosity of $365~\textrm{fb}^{-1}$ collected at the $Υ(4S)$ resonance. An additional $43~\textrm{fb}^{-1}$ was collected at a center-of-mass energy $60~\textrm{MeV}$ below the resonance to estimate the $e^+e^-\to q\bar{q}$ continuum background. One of the $B$ mesons from the $Υ(4S) \to B\bar{B}$ decay is fully reconstructed in a hadronic decay mode. The $B \to X_s ν\barν$ decay is reconstructed with a sum-of-exclusives approach that uses 30 $X_s$ decay modes. This approach provides high sensitivity to the inclusive decay despite the presence of two undetected neutrinos. The search is performed in three regions of the $X_{s}$ mass, chosen to separate contributions from prominent resonances. We do not observe a significant signal and set upper limits at 90% confidence level on the partial branching fractions of $2.2 \times 10^{-5}$, $9.5 \times 10^{-5}$, and $31.2 \times 10^{-5}$ for the regions $0.0 < M_{X_{s}} < 0.6~\textrm{GeV}/c^{2}$, $0.6 < M_{X_{s}} < 1.0~\textrm{GeV}/c^{2}$, and $M_{X_{s}} > 1.0~\textrm{GeV}/c^{2}$, respectively. Combining the three mass regions, we obtain an upper limit on the total branching fraction of $\mathcal{B}(B \to X_s ν\barν) < 3.2 \times 10^{-4}$.

We investigate photon-pion discrimination in regimes where electromagnetic showers overlap at the scale of calorimeter granularity. Using full detector simulations with fine-grained calorimeter segmentation of approximately $0.025\times0.025$ in $(η,φ)$, we benchmark three approaches: boosted decision trees (BDTs) on shower-shape variables, dense neural networks (DNNs) on the same features, and a ResNet-based convolutional neural network operating directly on calorimeter cell energies. The ResNet significantly outperforms both baselines and achieves further gains when augmented with soft scoring and an auxiliary $ΔR$ regression head. Our results demonstrate that residual convolutional architectures, combined with physics-informed loss functions, can substantially improve photon identification in high-luminosity collider environments where overlapping electromagnetic showers challenge traditional methods.
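Why the showers overlap at this granularity follows from two-body kinematics. This sketch assumes the pion background is $\pi^0 \to \gamma\gamma$. For a symmetric decay, $m_{\pi^0}^2 = 2E_1E_2(1-\cos\theta)$ gives a minimum photon opening angle $\theta_{\min} = 2\arcsin(m_{\pi^0}/E)$. The sketch compares this angle with the $0.025$ cell size quoted above, treating the angle as a $\Delta R$ separation, which is a good approximation near $\eta \approx 0$.

```python
import math

M_PI0 = 0.1349768  # neutral-pion mass, GeV
CELL = 0.025       # calorimeter cell size in eta and phi (from the text)


def min_opening_angle(energy_gev, mass_gev=M_PI0):
    """Minimum opening angle (rad) between the two photons, reached in a symmetric decay."""
    return 2.0 * math.asin(mass_gev / energy_gev)


for e in (5.0, 10.0, 20.0, 50.0, 100.0):
    theta = min_opening_angle(e)
    print(f"E = {e:5.1f} GeV: theta_min = {theta:.4f} rad = {theta / CELL:.2f} cells")
```

Above roughly 11 GeV the minimum separation falls below a single cell. In this regime the two photon showers merge and shower-shape variables start to lose discriminating power.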

2511.10216

Charged-hadron distributions in heavy-flavor jets are measured using proton-proton collision data at a center-of-mass energy of $\sqrt{s} = 13$ TeV collected by the LHCb experiment. Distributions of the longitudinal momentum fraction, transverse momentum, and radial profile of charged hadrons are measured separately in beauty and charm jets. These are compared with distributions previously measured by LHCb in jets produced back-to-back with a $Z$ boson, which in the forward region are primarily light-quark-initiated, in order to compare the hadronization mechanisms of heavy and light quarks. The observed differences between heavy- and light-jet distributions are consistent with the heavy-quark dynamics expected from the dead-cone effect. They are also consistent with the hard fragmentation of the heavy-flavor hadron previously measured in single-hadron fragmentation functions. This measurement provides additional constraints for the extraction of collinear and transverse-momentum-dependent heavy-flavor fragmentation functions and offers another approach to probing the mechanisms that govern heavy-flavor hadronization.
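The three hadron-in-jet observables can be computed from the jet and hadron 3-momenta. The sketch assumes the common definitions: $z = \vec p_{\rm jet}\cdot\vec p_h/|\vec p_{\rm jet}|^2$, $j_T = |\vec p_h \times \vec p_{\rm jet}|/|\vec p_{\rm jet}|$, and $r$ as the $(\eta,\phi)$ distance from the jet axis. The exact conventions in the measurement, for example rapidity versus pseudorapidity in $r$, should be checked against the paper.

```python
import math


def eta_phi(p):
    """Pseudorapidity and azimuth of a 3-momentum (px, py, pz)."""
    px, py, pz = p
    return math.asinh(pz / math.hypot(px, py)), math.atan2(py, px)


def hadron_in_jet(jet, hadron):
    """Return (z, jT, r) for a charged hadron inside a jet.

    z  = p_jet . p_h / |p_jet|^2        longitudinal momentum fraction
    jT = |p_h x p_jet| / |p_jet|        momentum transverse to the jet axis
    r  = Delta R(h, jet) in (eta, phi)  radial distance from the jet axis
    """
    jx, jy, jz = jet
    hx, hy, hz = hadron
    jet_mag2 = jx * jx + jy * jy + jz * jz
    z = (jx * hx + jy * hy + jz * hz) / jet_mag2
    cross = (jy * hz - jz * hy, jz * hx - jx * hz, jx * hy - jy * hx)
    jt = math.sqrt(sum(c * c for c in cross)) / math.sqrt(jet_mag2)
    (eta_j, phi_j), (eta_h, phi_h) = eta_phi(jet), eta_phi(hadron)
    dphi = (phi_h - phi_j + math.pi) % (2 * math.pi) - math.pi
    r = math.hypot(eta_h - eta_j, dphi)
    return z, jt, r


print(hadron_in_jet((10.0, 0.0, 0.0), (2.0, 1.0, 0.0)))  # toy momenta in GeV
```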

Inspired by the $X(4140)$ structure reported in the $J/ψφ$ system by the CDF experiment in 2009, a series of searches has been carried out in the $J/ψφ$ and $J/ψK$ channels, leading to claims of ten structures in the $B \rightarrow J/ψφK$ system. This article provides a comprehensive review of experimental developments, from the initial evidence for $X(4140)$ at CDF to the amplitude analyses and diffractive-process investigations by the LHCb experiment, as well as theoretical interpretations of these states. A triplet of $J^{PC} = 1^{++}$ states with relatively large mass splittings (about 200 MeV; natural units are adopted) has been identified in the $J/ψφ$ system by LHCb. Their mass-squared values align approximately linearly with a possible radial quantum number, suggesting that the triplet may represent a radially excited family. For $X(4140)$, the first state in the triplet, the width reported by LHCb is inconsistent with those measured by other experiments, and possible reasons for this discrepancy are discussed. The Belle experiment reported an excess at 4.35 GeV in the $J/ψφ$ mass spectrum in a two-photon process, and a possible connection between this excess and a potential spin-2 excess in the LHCb data is also investigated. In addition, potential parallels between the $J/ψφ$ and $J/ψJ/ψ$ systems, both composed of two vector mesons, are discussed. The continued interest in, and complexity of, these systems underscore the need for further experimental exploration with increased statistical precision across a variety of experiments, particularly those with relatively flat efficiency across the Dalitz plot. The $J/ψω$, $φφ$, $ρω$, and $ρφ$ systems are mentioned, and the prospects for the $J/ψΥ$ and $ΥΥ$ systems are also highlighted.

2511.08212

A search for long-lived particles is presented using final states that include a displaced vertex with low-momentum tracks, large missing transverse momentum, and a jet from initial-state radiation. The search uses proton-proton collision data at a center-of-mass energy of 13 TeV collected by the CMS experiment at the CERN LHC in 2017 and 2018, corresponding to an integrated luminosity of 100 fb$^{-1}$. The analysis adopts supersymmetric (SUSY) coannihilation scenarios as benchmark signal models. In these models, the next-to-lightest SUSY particle (NLSP) is less than 25 GeV heavier than the lightest SUSY particle (LSP), which is assumed to be a bino-like neutralino. In the top squark ($\tilde{\mathrm{t}}$) NLSP model, the NLSP is a long-lived $\tilde{\mathrm{t}}$, while in the bino-wino NLSP scenario, the mass-degenerate NLSPs are a wino-like long-lived neutralino and a short-lived chargino. The search excludes top squarks with masses up to 400-1100 GeV and wino-like neutralinos with masses up to 220-550 GeV. The exact bounds depend on the signal parameters, including the mass difference, mass, and lifetime of the long-lived particle. These are the most stringent limits to date for the $\tilde{\mathrm{t}}$ and bino-wino NLSP models.

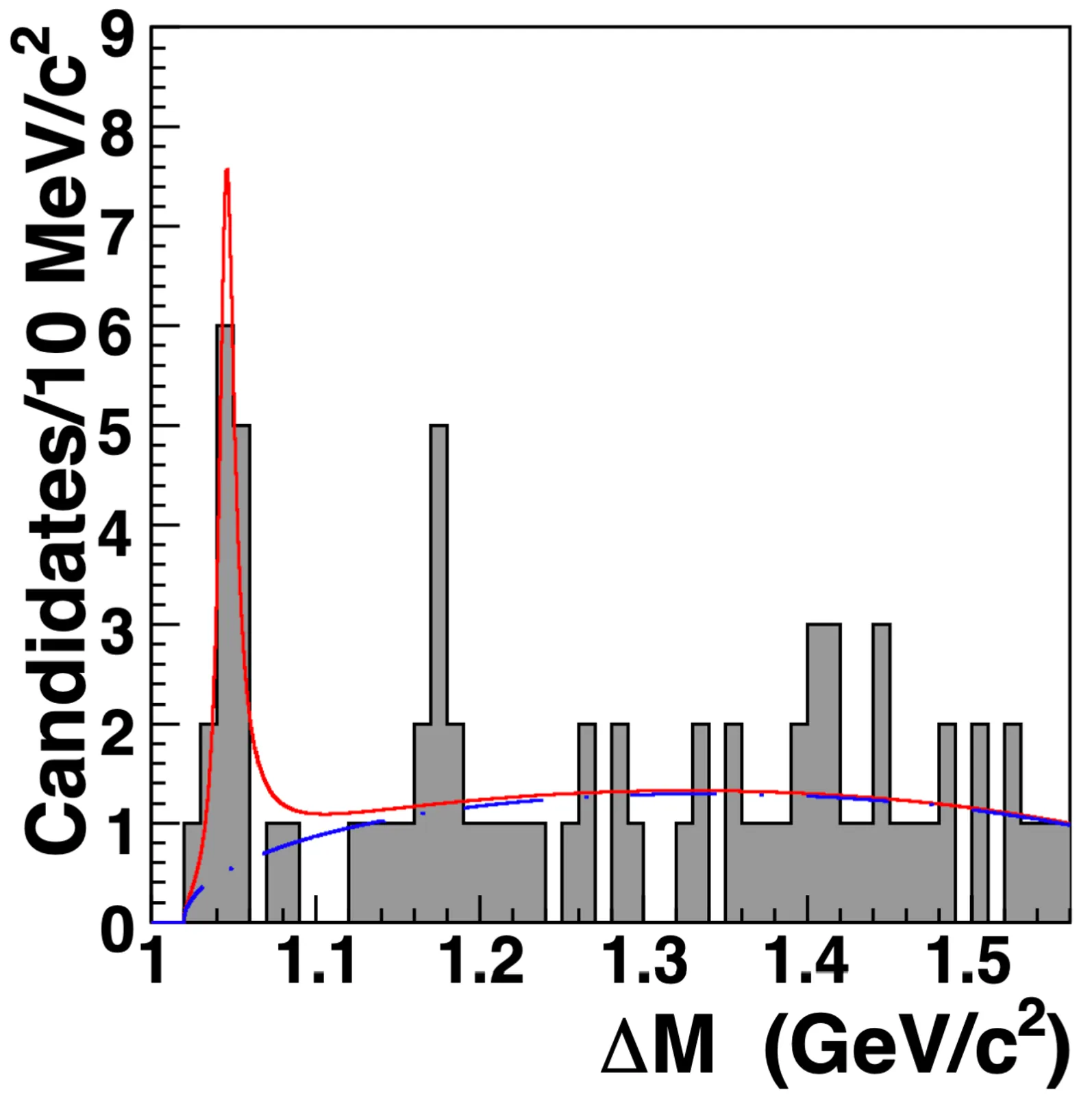

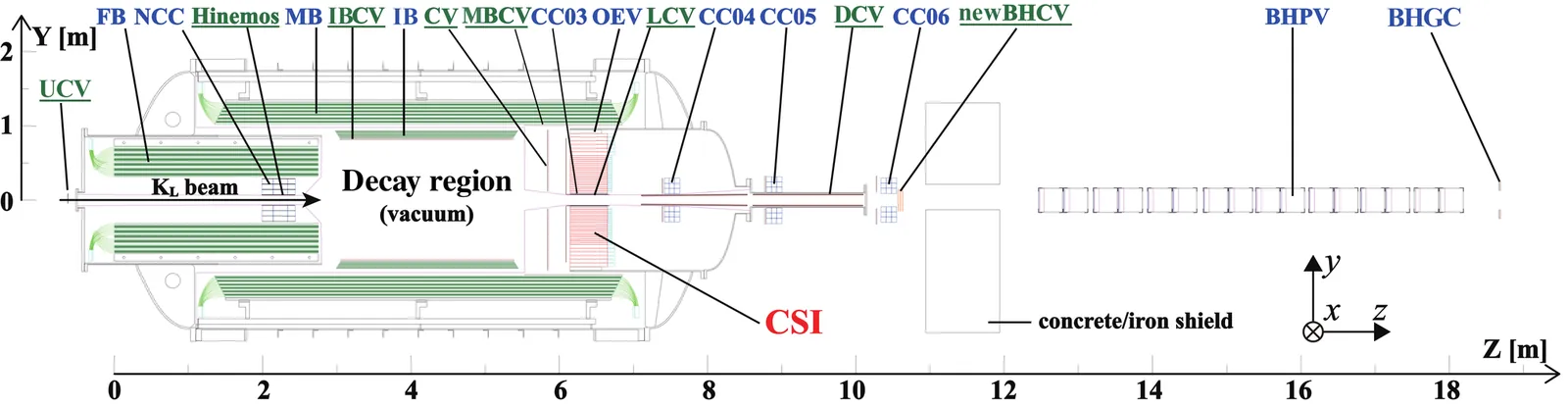

We report a search for an invisible particle $X$ in the decay $K^0_L\rightarrow γX$ ($X \to \text{invisible}$), where $X$ can be interpreted as a massless or massive dark photon. No evidence for $X$ was found: 13 candidate events were observed, consistent with a predicted background of $12.66 \pm 4.42_{\text{stat.}} \pm 2.13_{\text{syst.}}$ events. Upper limits on the branching ratio of $K^0_L\rightarrow γX$ were set for the $X$ mass range $0 \leq m_X \leq 425$ MeV/$c^2$. For massless $X$, the upper limit was $3.4\times10^{-7}$ at the $90\%$ confidence level. For massive $X$, the upper limits in the searched mass region ranged from $O(10^{-7})$ to $O(10^{-3})$.

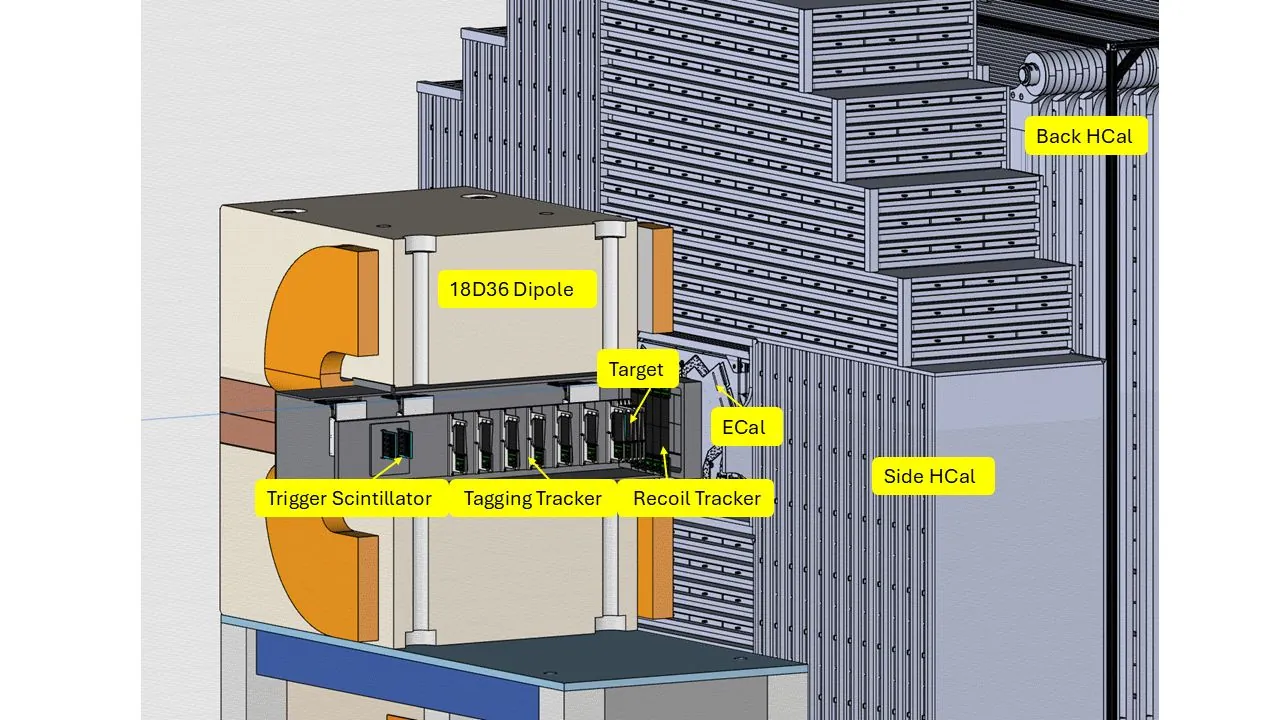

Searching for dark matter (DM) at colliders is one of the biggest challenges in high-energy physics today. Significant efforts have been made to detect DM in the mass range of 1-10,000 GeV at the Large Hadron Collider and other experiments. However, the lower mass range of 0.001-1 GeV remains largely unexplored, despite strong theoretical motivation from thermal dark matter models in that range. The Light Dark Matter eXperiment (LDMX) is a proposed fixed-target experiment at SLAC's LCLS-II 8 GeV electron beamline, specifically designed for the direct production of sub-GeV dark matter. The experiment relies on detecting missing-momentum and missing-energy signatures. In this talk, we will present the experimental design of the LDMX detector and discuss strategies for detecting dark matter. The talk will detail traditional discriminant-based methods that use the electromagnetic and hadronic calorimeters as a veto against Standard Model processes. Additionally, we will discuss the application of advanced machine learning techniques, such as boosted decision trees and graph neural networks, to distinguish signal from background.

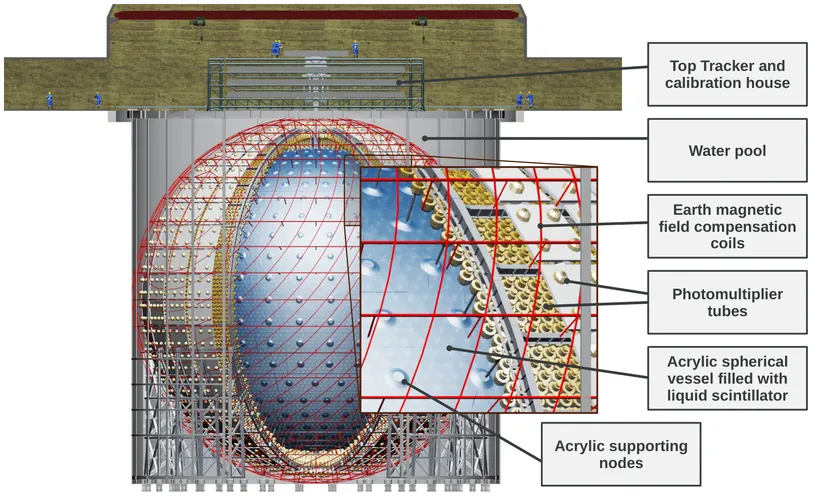

Geoneutrinos, the antineutrinos emitted in the decays of long-lived radioactive elements inside Earth, serve as a unique tool for studying the composition and heat budget of our planet. The Jiangmen Underground Neutrino Observatory (JUNO) experiment in China, which has recently completed construction, is expected to collect a sample comparable in size to the entire existing world geoneutrino dataset in less than a year. This paper presents an updated estimate of JUNO's sensitivity to geoneutrinos. It uses the best knowledge available to date about the experimental site, the surrounding nuclear reactors, the detector response uncertainties, and the constraints expected from the TAO satellite detector. To facilitate comparison with present and future geological models, our results cover a wide range of predicted signal strengths. Despite the significant background from reactor antineutrinos, the experiment will measure the total geoneutrino flux with a precision comparable to that of existing experiments within its first few years, ultimately achieving a world-leading precision of about 8% over ten years. JUNO's large statistics will also allow the uranium-238 and thorium-232 contributions to be separated with unprecedented precision, providing crucial constraints on models of Earth's formation and composition. Observing the mantle signal above the lithospheric flux will be possible but challenging: for models with the highest predicted mantle concentrations of heat-producing elements, a 3-sigma detection over six years requires knowledge of the lithospheric flux to within 15%. Together with complementary measurements from other locations, JUNO's geoneutrino results will offer cutting-edge, high-precision insights into Earth's interior, of fundamental importance to both the geoscience and neutrino physics communities.

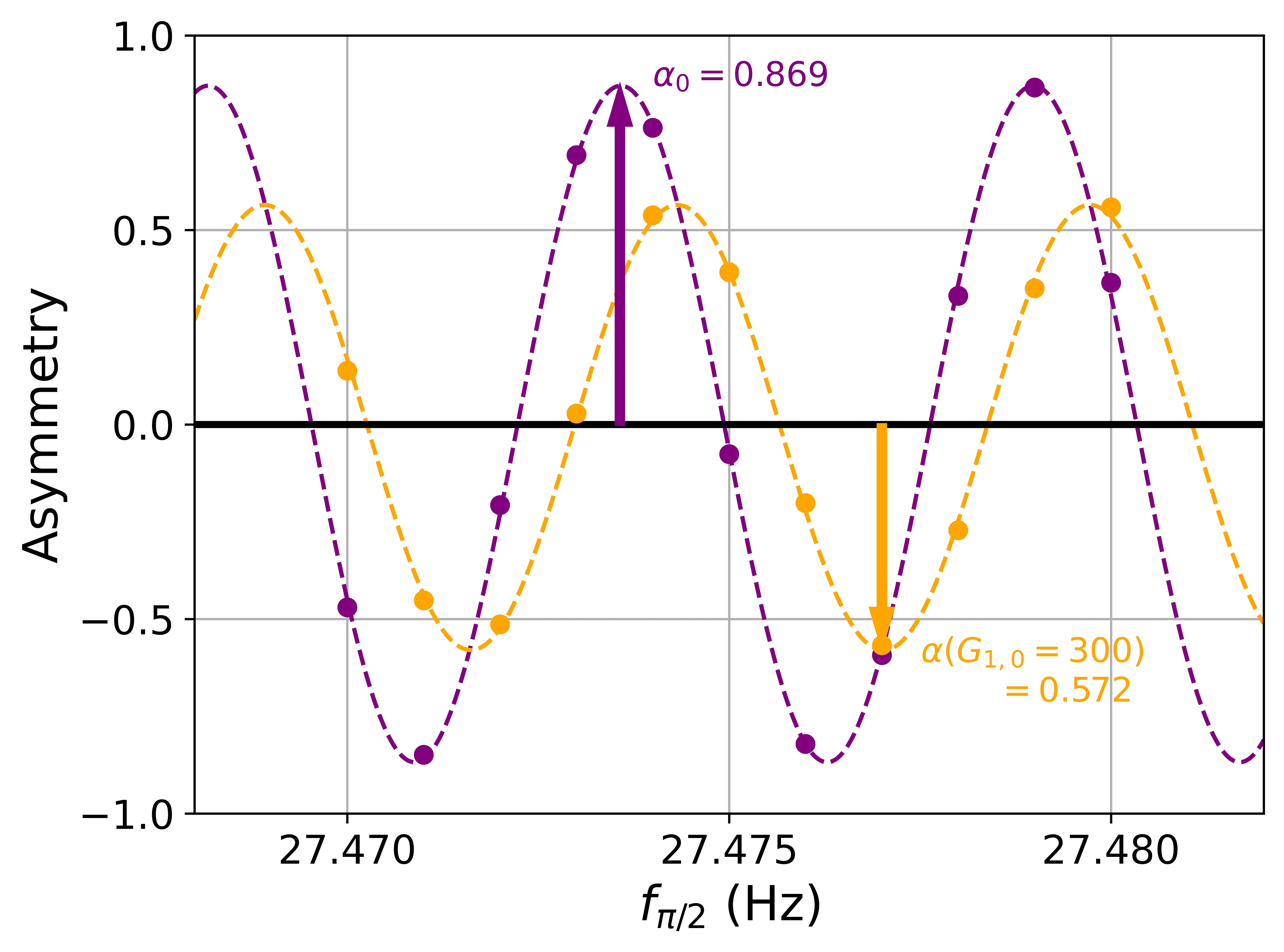

We present a novel method for extracting the energy spectrum of ultracold neutrons from measurements of magnetically induced spin depolarization using the n2EDM apparatus. The method is also sensitive to the storage properties of the materials used to trap ultracold neutrons, specifically whether wall collisions are specular or diffuse. We highlight the sensitivity of this new technique by comparing the two storage chambers of the n2EDM experiment. We validate the extraction against an independent measurement of how this energy spectrum is polarized by a magnetic filter. Finally, we calculate the neutron center-of-mass offset, an important systematic effect for measurements of the neutron electric dipole moment.
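The link between the energy spectrum and the center-of-mass offset can be illustrated in a simple model. Assume ergodic motion, so that neutrons of energy $E$ (defined at the chamber floor) have a density at height $h$ proportional to $\sqrt{E - m_n g h}$, with $m_n g \approx 102.5$ neV/m. The offset is then $\langle h\rangle - H/2$, averaged over the spectrum. The density model, the chamber height used below, the toy spectrum and the function names are all assumptions, not the n2EDM analysis.

```python
import math

MN_G = 102.5  # neutron mass times g, in neV per metre (approximate)


def com_offset(energy_nev, height_m, steps=10_000):
    """<h> - H/2 in metres for neutrons of a single energy E, measured at the floor.

    Assumes density proportional to sqrt(E - m g h); neutrons with E < m g H
    only fill the chamber up to h_E = E / (m g).
    """
    if energy_nev <= 0:
        raise ValueError("energy must be positive")
    h_max = min(height_m, energy_nev / MN_G)
    dh = h_max / steps
    num = den = 0.0
    for k in range(steps):  # midpoint-rule integration over height
        h = (k + 0.5) * dh
        rho = math.sqrt(max(0.0, energy_nev - MN_G * h))
        num += h * rho
        den += rho
    return num / den - height_m / 2.0


def spectrum_com_offset(spectrum, height_m):
    """Population-weighted offset for a discrete spectrum {energy_neV: weight}."""
    total = sum(spectrum.values())
    return sum(w * com_offset(e, height_m) for e, w in spectrum.items()) / total


toy_spectrum = {60.0: 0.2, 100.0: 0.5, 160.0: 0.3}  # hypothetical energies and weights
print(spectrum_com_offset(toy_spectrum, height_m=0.12))  # hypothetical 12 cm chamber
```

Softer spectra crowd the neutrons toward the floor and give a larger (more negative) offset. This is why an extracted energy spectrum feeds directly into the offset estimate.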